
EdgeAI Cluster

Your multi-rack AI cluster for scaling training and delivering inference at teraflop speeds.

98% savings over

Google Cloud

No hourly rates,

no usage limits.

100%

private

On-premises deployment keeps your data secure.

Up to 768GB

GPU RAM

Train and run models at scale with configurations of 16 to 32 RTX 4090D cards.

Cloud bills vs EdgeAI.

Deploy once. Run thousands of models without watching the meter.

Your data stays in your loop.

Run AI workloads locally. No internet required. No data leaves your infrastructure.

.webp)

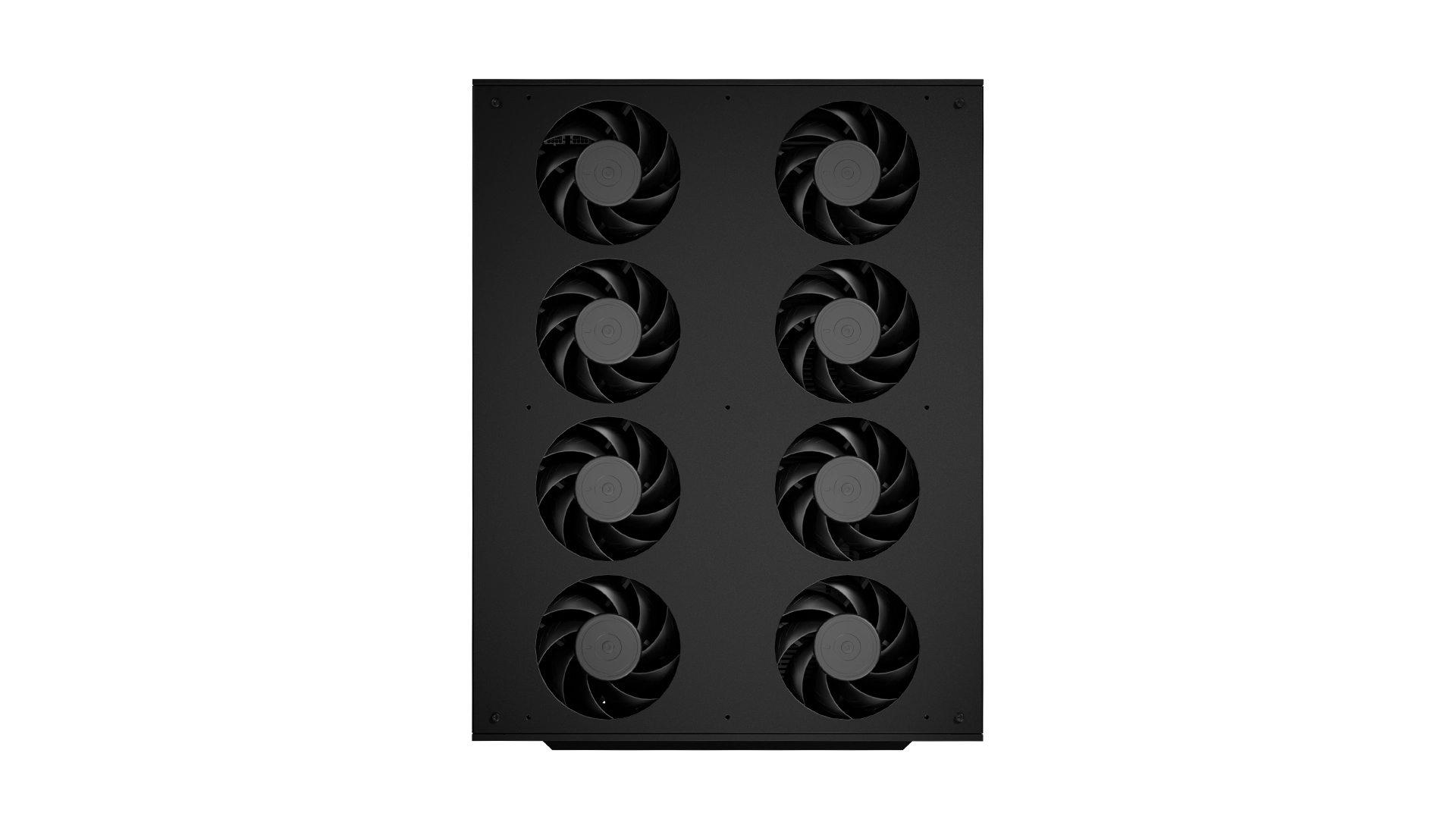

Built to run 24/7.

Ready for AI training, inference and accelerated MLOps workflows with dedicated multi-GPU infrastructure.

Keep cards cool. 16 fans, 1600 vents.

I/O for AI workflows.

3x 1Gb LAN

2x 10Gb LAN

8x HDMI port

24x DP port

1x VGA port

4x USB Type-A (USB3.2 Gen1)

Built to flow. The geometric pattern channels airflow like the ventilation of an ancient pyramid. Each triangle houses your computational power.

RTX 4090D for the best performance per dollar.

Deliver over a petaflop of AI performance and empower your team with scalable inference, fine-tuned models, agent systems, and heavy compute tasks.

Up to

768 GB

GDDR6X VRAM

4704 TFLOPS

FP32 tensor performance

32,256 GB/s

memory bandwidth

14,952

CUDA cores

512

Tensor cores

64 GB/s

PCIe 4.0 x16 bandwidth
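The "up to" totals above appear to be per-card figures summed across the largest configuration of 32 cards. The sketch below reproduces that arithmetic in Python. The per-card values (24 GB of VRAM and 1008 GB/s of memory bandwidth for an RTX 4090D) are assumptions that are not stated on this page, and the helper name `aggregate` is hypothetical.

```python
# Assumed per-card figures for the RTX 4090D (not stated on this page).
VRAM_PER_CARD_GB = 24
BANDWIDTH_PER_CARD_GB_S = 1008

# Figures taken from the page.
MAX_CARDS = 32
TOTAL_TENSOR_TFLOPS = 4704


def aggregate(per_card_value: float, cards: int = MAX_CARDS) -> float:
    """Sum a per-card figure across all cards in the cluster."""
    return per_card_value * cards


total_vram_gb = aggregate(VRAM_PER_CARD_GB)
total_bandwidth_gb_s = aggregate(BANDWIDTH_PER_CARD_GB_S)

# Working backwards: the tensor throughput each card would have to
# contribute for the cluster total quoted on the page.
tflops_per_card = TOTAL_TENSOR_TFLOPS / MAX_CARDS

print(f"VRAM: {total_vram_gb} GB")
print(f"Memory bandwidth: {total_bandwidth_gb_s} GB/s")
print(f"Tensor throughput per card: {tflops_per_card} TFLOPS")
```

Under these assumptions the totals match the 768 GB and 32,256 GB/s quoted above. Note that summed memory bandwidth describes each card reading its own VRAM; traffic between cards travels over the PCIe 4.0 x16 link instead.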

And that's fast.

RTX 4090 is 3x faster than Apple M2 Ultra for demanding AI workloads.

Chart: Relative Performance (tokens per second) across Accelerated Development, Enhanced Productivity, Content Generation, and Immersive Gaming.

Model training/fine-tuning: BERT-Base-Cased on GeForce RTX 4090 using mixed precision. Code assist: Code Llama 13B Int4 inference performance, INSEQ=100, OUTSEQ=100, batch size 1.

Source: NVIDIA

Run the models that matter.

Most popular models work out of the box. No vendor lock-in.

8B • 70B • 405B

Meta's flagship reasoning model

7B • 13B • 34B

Meta's flagship reasoning model

7B • 22B

Fast and efficient for production

9B • 27B

Google's lightweight powerhouse

7B • 14B • 32B

Strong multilingual performance

6.7B • 33B

Built specifically for developers
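Whether a given model size fits in the cluster's 768 GB of VRAM depends mostly on the numeric precision of its weights. The Python sketch below is a rough, weights-only estimate: it multiplies the parameter count by an assumed bytes-per-parameter value (2 bytes for FP16, 0.5 bytes for Int4) and ignores the KV cache, activations, and multi-GPU sharding overhead, so real requirements will be higher. The function names are hypothetical.

```python
CLUSTER_VRAM_GB = 768  # maximum configuration quoted on this page

# Assumed storage cost per parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def fits(params_billion: float, bytes_per_param: float) -> bool:
    """True if the weights alone fit in the cluster's total VRAM."""
    return weight_memory_gb(params_billion, bytes_per_param) <= CLUSTER_VRAM_GB


# Model sizes (in billions of parameters) taken from the list above.
for size in (8, 70, 405):
    for precision, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(size, nbytes)
        verdict = "fits" if fits(size, nbytes) else "does not fit"
        print(f"{size}B @ {precision}: {gb:.1f} GB, {verdict}")
```

By this estimate, a 405B model at FP16 needs about 810 GB for weights alone and exceeds 768 GB, while the same model quantized to Int4 needs roughly 203 GB.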

Want a demo of our plug-in AI Cluster?
