
Deep learning is a specialized area of machine learning that uses multi-layered neural networks to process vast amounts of data and uncover intricate patterns. Unlike traditional machine learning, which typically relies on structured datasets and simpler models, deep learning models excel at tasks like image recognition, language processing, and autonomous driving by processing large, unstructured data such as images, audio, and video.

Deep learning models require vast computational power to train because they can contain millions, or even billions, of parameters. Training is therefore time-consuming and resource-intensive, and it demands significant processing capability. This is where GPUs (Graphics Processing Units) come into play.

Why CPUs Aren’t Enough for Deep Learning

CPUs (Central Processing Units) are built for general-purpose work and excel at sequential computations on a relatively small number of powerful cores. Deep learning, however, requires performing huge numbers of similar calculations at the same time, which is where GPUs excel. GPUs are designed for parallel processing, with thousands of simpler cores that can work on many operations concurrently. This makes them well suited to training models on large datasets.
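To get a sense of how much parallel work a single layer contains, the sketch below counts the operations in one forward pass of a fully connected layer. It assumes the common convention of counting one multiply-add as two floating-point operations; the function name and the example sizes are illustrative, not taken from any framework.

```python
def dense_layer_work(batch: int, in_features: int, out_features: int) -> tuple[int, int]:
    """Return (independent dot products, total FLOPs) for Y = X @ W.

    X has shape (batch, in_features) and W has shape (in_features, out_features).
    Every element of Y is its own dot product, so none depends on another
    and all of them can be computed in parallel.
    """
    dot_products = batch * out_features
    # Each dot product needs in_features multiplies and about in_features adds.
    flops = 2 * batch * in_features * out_features
    return dot_products, flops


if __name__ == "__main__":
    outputs, flops = dense_layer_work(batch=64, in_features=4096, out_features=4096)
    print(f"{outputs:,} independent dot products, {flops / 1e9:.1f} GFLOPs")
    # prints: 262,144 independent dot products, 2.1 GFLOPs
```

A CPU with a few dozen cores has to work through those quarter-million dot products largely in sequence, while a GPU can spread them across thousands of cores at once.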


The Role of GPUs in Deep Learning

GPUs accelerate deep learning with specialized hardware; on NVIDIA GPUs, these are CUDA cores and Tensor cores. These cores perform the mathematical operations at the heart of deep learning, such as matrix multiplications, much faster than CPUs can. In simple terms, GPUs process large amounts of data and computation in parallel, which speeds up the entire training process.

Training runs that would take days, weeks, or even months on CPUs can often be completed in a fraction of the time on GPUs, thanks to their parallel processing capabilities. This makes GPUs indispensable for deep learning workflows.
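A back-of-the-envelope estimate shows why the hardware choice matters so much. The sketch below uses the widely cited rule of thumb that training a dense transformer costs roughly 6 × parameters × training tokens FLOPs; the model size, token count, and sustained-throughput figures are hypothetical inputs chosen for illustration, not benchmarks.

```python
SECONDS_PER_DAY = 86_400


def training_days(params: float, tokens: float, sustained_tflops: float) -> float:
    """Rough wall-clock training time in days.

    Assumes total compute of about 6 * params * tokens FLOPs (a common
    approximation for dense transformers) and a *sustained* throughput,
    which is usually well below the hardware's advertised peak.
    """
    total_flops = 6 * params * tokens
    seconds = total_flops / (sustained_tflops * 1e12)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical 1B-parameter model trained on 20B tokens.
    for label, tflops in [("CPU (~1 TFLOPS sustained)", 1), ("GPU (~100 TFLOPS sustained)", 100)]:
        print(f"{label}: {training_days(1e9, 20e9, tflops):,.0f} days")
```

Because the estimate scales inversely with sustained throughput, a 100× faster device turns years of CPU time into about two weeks.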


Top Features to Look for in the Best GPU for Deep Learning

When choosing the best GPU for deep learning, there are several key features that you must consider to ensure optimal performance for your tasks. Below are the most critical aspects to evaluate:

1. CUDA Cores

CUDA cores are the general-purpose parallel processors in NVIDIA GPUs; the name comes from CUDA (Compute Unified Device Architecture), NVIDIA's parallel computing platform. They handle the parallel computations that deep learning depends on, so within the same GPU generation, more CUDA cores generally means more work done simultaneously and faster training on large datasets and complex models. Core counts are less reliable for comparing GPUs across different architectures, because clock speeds and per-core capabilities also differ.
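Core count is only one input to raw throughput. A common way to estimate a GPU's peak FP32 rate is CUDA cores × boost clock × 2, since each core can complete one fused multiply-add (two floating-point operations) per cycle. The sketch below applies that formula; the first inputs roughly match the RTX 4090's published core count and boost clock, the second card is hypothetical, and real workloads rarely reach the peak.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each CUDA core completes one fused multiply-add (2 FLOPs) per clock.
    """
    flops_per_second = cuda_cores * boost_clock_ghz * 1e9 * 2
    return flops_per_second / 1e12


if __name__ == "__main__":
    # Roughly the RTX 4090's published core count and boost clock.
    print(f"RTX 4090: {peak_fp32_tflops(16_384, 2.52):.1f} TFLOPS")
    # Hypothetical card: more cores but a lower clock.
    print(f"Hypothetical card: {peak_fp32_tflops(18_000, 1.80):.1f} TFLOPS")
```

The hypothetical card has more cores but a lower theoretical peak, which is why core counts alone can mislead.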

2. Tensor Cores

Tensor cores are specialized units within NVIDIA GPUs designed specifically to accelerate deep learning. They perform matrix multiply-accumulate operations, usually on reduced-precision inputs such as FP16 or BF16, and these operations dominate the training of models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs). GPUs with more, and newer-generation, Tensor cores are better suited to high-performance deep learning, particularly when working with large datasets.
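Tensor cores execute small matrix multiply-accumulate operations, D = A × B + C, typically reading low-precision inputs such as FP16 and accumulating in higher precision. The pure-Python sketch below mimics that numeric behavior on a single tile: it rounds the inputs to IEEE half precision with the standard-library `struct` module and accumulates in a regular Python float. It illustrates the arithmetic only; it is not how any GPU or library implements the operation.

```python
import struct

Matrix = list[list[float]]


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Compute D = A x B + C with FP16 inputs and a higher-precision accumulator."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [row[:] for row in c]  # start from the accumulator tile C
    for i in range(rows):
        for j in range(cols):
            acc = d[i][j]
            for k in range(inner):
                acc += to_fp16(a[i][k]) * to_fp16(b[k][j])
            d[i][j] = acc
    return d


if __name__ == "__main__":
    print(to_fp16(0.1))  # 0.0999755859375: FP16 keeps only about 3 decimal digits
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    b = [[float(4 * i + j) for j in range(4)] for i in range(4)]
    zeros = [[0.0] * 4 for _ in range(4)]
    assert mma_tile(identity, b, zeros) == b
```

Rounding inputs to FP16 halves memory traffic and lets the hardware do more multiplies per cycle, while the higher-precision accumulator limits the error that builds up across the sum.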

3. VRAM (Video RAM)

VRAM is the GPU's onboard memory. During training it holds the model's weights, the current batch of data, and intermediate values such as activations, gradients, and optimizer state. The more VRAM a GPU has, the larger the models and batch sizes it can handle without running out of memory. For serious deep learning work, 16GB or more is a common recommendation. More VRAM does not make individual computations faster, but it lets you train larger models and use larger batches, which can shorten training overall.
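A quick way to see why VRAM fills up fast is to estimate memory per parameter. The sketch below uses commonly cited rules of thumb, which are assumptions rather than exact figures: about 2 bytes per parameter to hold FP16/BF16 weights for inference, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (FP16 weights and gradients plus FP32 master weights and two FP32 optimizer states). Activations, framework overhead, and batch size add more on top, so treat the results as lower bounds.

```python
# Rule-of-thumb bytes per parameter (assumptions; activations are excluded).
BYTES_PER_PARAM = {
    "fp16_inference": 2,          # FP16/BF16 weights only
    "mixed_precision_adam": 16,   # 2 (weights) + 2 (grads) + 4 (FP32 master) + 8 (Adam states)
}


def min_vram_gb(num_params: float, mode: str) -> float:
    """Lower-bound VRAM estimate in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: at least {min_vram_gb(params, mode):.0f} GB")
```

By this estimate, running a 7B-parameter model in FP16 needs at least 14 GB, while fully training it with Adam needs at least 112 GB, more than a single 24GB or 48GB card can hold.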

4. Memory Bandwidth

Memory bandwidth measures how quickly data moves between the GPU's memory and its cores, and is typically quoted in GB/s. Higher bandwidth keeps the cores supplied with data, which matters most for workloads that repeatedly read large amounts of data, such as large models and big batches, and it directly shortens training time for memory-bound operations. Bandwidth is a rate, not a capacity: it determines how fast data can be moved, while VRAM determines how much can be stored.
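Peak memory bandwidth follows from two datasheet numbers: the memory bus width and the effective per-pin data rate. The sketch below computes it and then estimates how long one full pass over a model's weights takes at that rate, which sets a floor on memory-bound steps such as reading every weight once per generated token during inference. The 384-bit, 21 Gbps inputs roughly match the RTX 4090's GDDR6X configuration; the 14 GB weight size is a hypothetical example.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def min_read_time_ms(num_bytes: float, bandwidth_gbs: float) -> float:
    """Lower bound on the time to stream `num_bytes` from VRAM once, in milliseconds."""
    return num_bytes / (bandwidth_gbs * 1e9) * 1e3


if __name__ == "__main__":
    bw = peak_bandwidth_gbs(384, 21.0)  # about 1,008 GB/s
    print(f"Peak bandwidth: {bw:,.0f} GB/s")
    print(f"Reading 14 GB of weights once: at least {min_read_time_ms(14e9, bw):.1f} ms")
```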

5. Compute Performance (TFLOPS)

TFLOPS (trillions of floating-point operations per second) is a measure of GPU processing power. The higher the TFLOPS rating, the more computations the GPU can perform each second, which speeds up model training. When comparing GPUs, make sure the figures use the same precision (for example FP32, FP16, or FP8) and the same sparsity assumption, because vendors often quote their highest available number. Peak TFLOPS is also a theoretical maximum that real workloads rarely sustain.
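Peak TFLOPS is only reachable when a workload does enough arithmetic for each byte it moves. The roofline model captures this: attainable throughput is the smaller of the compute peak and arithmetic intensity (FLOPs per byte) × memory bandwidth. The sketch below applies it; the 82.6 TFLOPS and 1,008 GB/s inputs are illustrative figures roughly matching an RTX 4090's FP32 peak and memory bandwidth, and the intensities are hypothetical.

```python
def attainable_tflops(intensity_flops_per_byte: float, peak_tflops: float, bandwidth_gbs: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    memory_bound_tflops = intensity_flops_per_byte * bandwidth_gbs / 1e3  # GFLOP/s -> TFLOPS
    return min(peak_tflops, memory_bound_tflops)


def ridge_point(peak_tflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity (FLOPs/byte) above which a kernel becomes compute-bound."""
    return peak_tflops * 1e3 / bandwidth_gbs


if __name__ == "__main__":
    peak, bw = 82.6, 1008.0
    print(f"Ridge point: {ridge_point(peak, bw):.0f} FLOPs/byte")
    for intensity in (1, 10, 100, 1000):
        print(f"intensity {intensity:>4}: {attainable_tflops(intensity, peak, bw):.1f} TFLOPS")
```

Kernels below the ridge point (around 82 FLOPs/byte with these inputs) are limited by memory bandwidth, so a higher TFLOPS rating alone would not speed them up.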

6. Compatibility with Deep Learning Frameworks

Make sure the GPU is well supported by popular deep learning frameworks such as TensorFlow, PyTorch, and Keras. NVIDIA's CUDA platform and cuDNN library are tightly integrated with these frameworks, which is a major reason NVIDIA GPUs are generally preferred for deep learning. Good framework support lets the GPU run training efficiently and makes the whole process more reliable.
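Before training, it is worth confirming that your framework actually sees the GPU. The sketch below assumes PyTorch as the framework and uses its standard device-query calls (`torch.cuda.is_available`, `torch.cuda.get_device_properties`, `torch.backends.cudnn.version`); the `gpu_report` helper itself is hypothetical, and it degrades gracefully if PyTorch is not installed. Other frameworks have their own equivalents.

```python
import importlib.util


def gpu_report() -> dict:
    """Summarize what PyTorch can see; works even if PyTorch is not installed."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False, "cuda_available": False}

    import torch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        "cuda_build": torch.version.cuda,  # None on CPU-only builds
        "cudnn_version": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
    }
    if report["cuda_available"]:
        props = torch.cuda.get_device_properties(0)
        report["device_name"] = props.name
        report["vram_gb"] = round(props.total_memory / 1e9, 1)
    return report


if __name__ == "__main__":
    for key, value in gpu_report().items():
        print(f"{key}: {value}")
```

If PyTorch was installed as a CPU-only build, `cuda_available` comes back False even when an NVIDIA GPU is present, which usually means the CUDA-enabled build needs to be installed instead.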

Top Picks for the Best Deep Learning GPUs

1. NVIDIA H100

- Why it’s suitable:

The NVIDIA H100 is the top choice for large-scale AI and deep learning. Built on the Hopper architecture, the SXM version pairs 80GB of HBM3 memory with exceptional Tensor Core performance (up to 1,979 TFLOPS in FP16 and 3,958 TFLOPS in FP8, both with sparsity), so it can handle the most complex deep learning tasks, including training large language models (LLMs).

- Use case:

Best for high-performance computing in AI research, large-scale deep learning, and enterprise-level AI model training.

- Verdict:

Ideal for researchers and enterprises working with massive datasets and complex models. However, it's a premium choice with a high cost.

2. NVIDIA A100 Tensor Core

- Why it’s suitable:

The NVIDIA A100 is optimized for AI training and supports Multi-Instance GPU (MIG), which partitions a single GPU into up to seven isolated instances so that several smaller jobs can share it. The 80GB version uses HBM2e memory and offers great versatility for deep learning applications.

- Use case:

Used extensively in AI training, cloud AI infrastructure, and big data analytics.

- Verdict:

High performance at a lower cost than the H100, making it suitable for enterprises and researchers with large deep learning projects.

3. NVIDIA RTX 4090

- Why it’s suitable:

The RTX 4090 is a consumer-grade GPU, but it still provides powerful parallel processing for deep learning. With 24GB of GDDR6X VRAM and about 1TB/s of memory bandwidth, it can handle image processing, object detection, and NLP tasks.

- Use case:

Ideal for mid- to high-level deep learning projects, such as computer vision, image recognition, and AI model fine-tuning.

- Verdict:

Best for individuals and teams with a larger budget who need a powerful GPU for personal research or deep learning model development.

4. NVIDIA RTX A6000 Tensor Core GPU

- Why it’s suitable:

The RTX A6000 is a professional GPU designed for deep learning, AI model training, and rendering. It has 48GB of GDDR6 memory and tensor cores that accelerate training of AI models and machine learning workloads.

- Use case:

Ideal for deep learning researchers and AI professionals working on both deep learning models and real-time applications.

- Verdict:

Balances performance and cost, making it a strong fit for professional AI research teams.

5. AMD Instinct MI300X

- Why it’s suitable:

AMD’s MI300X is a strong performer for high-performance computing and AI workloads. With 192GB of HBM3 memory, it can hold very large models on a single GPU, which makes it well suited to large-scale AI applications.

- Use case:

Suitable for deep learning and high-performance computing workloads in AMD-based environments built on AMD's ROCm software stack.

- Verdict:

The best AMD option for those working on AI and deep learning who want a more affordable alternative to NVIDIA's high-end GPUs.

Cloud vs. Local GPU for Deep Learning

| Feature | Cloud GPUs | Local GPUs |
|---|---|---|
| Scalability | Easily scalable, add or remove GPU resources as needed. | Limited scalability, requires physical hardware for scaling. |
| Cost | Pay-as-you-go pricing, cost-effective for short-term or project-based use. | High upfront cost for hardware, but no ongoing rental fees. |
| Latency | Higher latency due to data transfer between cloud and user systems. | Low latency, real-time processing with direct access to GPU. |
| Maintenance | Cloud providers handle hardware maintenance and upgrades. | User is responsible for hardware maintenance, cooling, and upgrades. |
| Data Security | Data is stored and processed remotely, potential privacy concerns. | Full control over data, processed locally, more secure for sensitive information. |
| Internet Dependency | Requires a stable internet connection for access. | Does not require an internet connection to operate. |
| Flexibility | Flexible and accessible from anywhere. | Limited to physical location, but more control over data processing. |
| Use Case | Ideal for large-scale or temporary deep learning tasks with fluctuating needs. | Perfect for high-demand, continuous deep learning tasks requiring real-time processing. |
| Power Consumption | No direct concern for the user, but cloud services can be expensive long-term. | Higher power consumption and cooling requirements, but manageable. |
| Ideal For | Businesses and researchers needing scalable, short-term GPU access. | Organizations that require high-performance, secure, and consistent deep learning. |
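The cost comparison above can be turned into a simple break-even calculation: local hardware pays for itself once the cloud rental you avoid exceeds the purchase price plus local running costs. The sketch below models only the hardware price, an hourly cloud rate, and electricity; all figures are hypothetical placeholders, and it ignores cooling, maintenance, depreciation, and staff time.

```python
import math


def break_even_hours(hardware_cost: float, cloud_rate_per_hour: float,
                     power_draw_kw: float, electricity_per_kwh: float) -> float:
    """GPU-hours of use after which buying local hardware beats renting cloud GPUs."""
    local_running_cost = power_draw_kw * electricity_per_kwh  # cost per hour of local use
    hourly_saving = cloud_rate_per_hour - local_running_cost
    if hourly_saving <= 0:
        return math.inf  # local running costs match or exceed the cloud rate, so buying never pays off
    return hardware_cost / hourly_saving


if __name__ == "__main__":
    # Hypothetical inputs: $2,000 card, $2.00/hour cloud rate, 0.5 kW draw, $0.20/kWh.
    hours = break_even_hours(2_000, 2.00, 0.5, 0.20)
    print(f"Break-even after about {hours:,.0f} GPU-hours ({hours / 24:.0f} days of continuous use)")
```

The shape of the result matches the table: for short, bursty projects you may never reach the break-even point, while continuous workloads can cross it within weeks.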

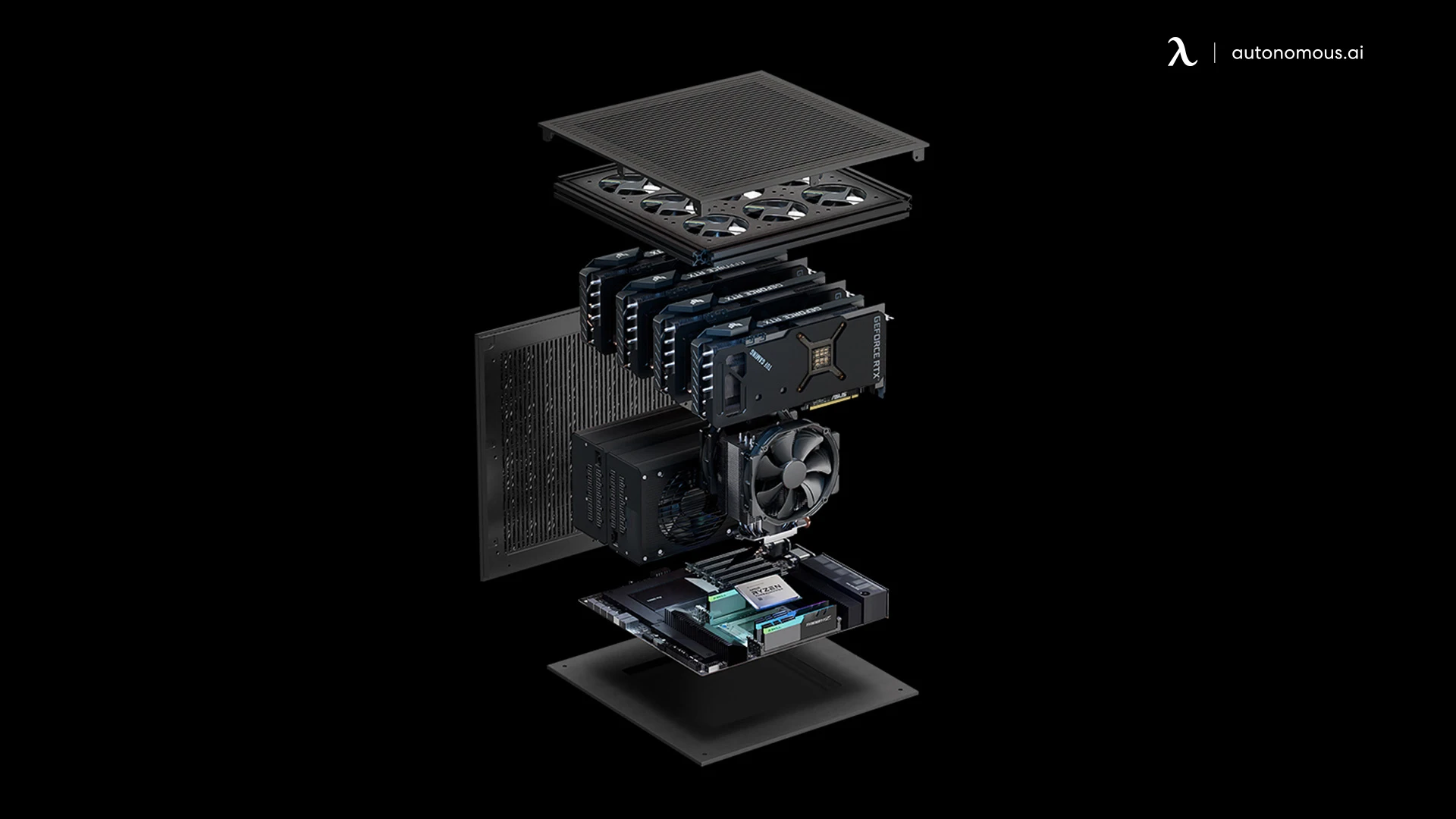

EdgeAI Computer: The Ideal Local GPU for Deep Learning

For businesses looking for a local solution that combines high performance, security, and cost-efficiency, the EdgeAI Computer is an ideal choice. Powered by NVIDIA RTX 4090D GPUs, it delivers powerful processing capabilities, making it suitable for demanding deep learning tasks such as model training, real-time video analysis, and predictive health monitoring. The EdgeAI Computer ensures that businesses can process large datasets and run complex models without relying on cloud infrastructure.

- AI Performance: With over a petaflop of AI performance, it handles demanding tasks like deep learning model training and data analysis.

- Scalability: Configurable with up to 8 GPUs, the EdgeAI Computer can scale as your AI workload grows.

- Data Privacy: All data is processed locally, ensuring complete control and security, with no data sent to the cloud.

- Cost-Efficiency: Save up to 96% compared to traditional cloud services by eliminating ongoing cloud fees.

For businesses and developers exploring Edge AI, processing data locally enables faster decision-making and lower latency. To make an informed decision, it helps to understand how Edge AI works compared to cloud-based models and to weigh the benefits of Edge AI against Cloud AI for your organization's needs. Edge AI applications are already transforming industries such as healthcare, autonomous vehicles, and smart cities by enabling real-time intelligence at the edge.

FAQs

What is the best GPU for deep learning?

The best GPU for deep learning depends on your specific needs. The NVIDIA H100 is the most advanced option, offering unparalleled performance for large-scale AI tasks. For cost-effective solutions, the NVIDIA RTX 4090 offers excellent performance for most deep learning applications without the high cost of the H100.

Why is a GPU better than a CPU for deep learning?

GPUs are designed for parallel processing, which allows them to handle multiple tasks simultaneously. Deep learning requires handling large datasets and performing complex calculations, making GPUs significantly faster than CPUs, which are designed for sequential tasks.

How many GPUs do I need for deep learning?

For small projects, one GPU is often sufficient. For larger projects, running training across multiple GPUs in parallel can substantially reduce training time, although the speedup is usually less than linear because the GPUs must synchronize with each other. Data-center GPUs like the NVIDIA A100 are designed for this kind of multi-GPU scaling.
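Multi-GPU speedups are rarely linear, because part of each training step (data loading, gradient synchronization, and other serial work) does not split across devices. Amdahl's law gives a simple upper bound on the speedup; the sketch below applies it, with the 95% parallel fraction being a hypothetical value rather than a measurement.

```python
def amdahl_speedup(num_gpus: int, parallel_fraction: float) -> float:
    """Upper bound on speedup when only `parallel_fraction` of the work scales with GPUs."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_gpus)


if __name__ == "__main__":
    for gpus in (1, 2, 4, 8):
        print(f"{gpus} GPU(s): up to {amdahl_speedup(gpus, 0.95):.2f}x faster")
```

Under that assumption, eight GPUs deliver at most about 5.9× the single-GPU speed, not 8×.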

Can I use a GPU for deep learning in the cloud?

Yes, cloud providers like AWS, Google Cloud, and Microsoft Azure offer GPUs for rent. This is a good option if you don’t want to invest in physical hardware and need flexible, on-demand computing power for your deep learning tasks.

What’s the difference between the NVIDIA A100 and the RTX 4090 for deep learning?

The NVIDIA A100 is a data-center-grade GPU with specialized features for large-scale machine learning and AI workloads. The RTX 4090, while a powerful consumer-grade GPU, is better suited for gaming and smaller-scale deep learning applications. The A100 is optimized for high throughput and scalability, making it ideal for professional research or enterprise-level projects.

Is an AMD GPU good for deep learning?

While AMD GPUs have made significant improvements, particularly with their Instinct MI300 series, NVIDIA GPUs are still the industry standard for deep learning due to their superior support for deep learning frameworks and the CUDA ecosystem.

What GPU should I get for machine learning on a budget?

For those on a budget, the NVIDIA RTX 3060 or RTX 3070 offer excellent value, providing enough power for medium-scale machine learning tasks without breaking the bank. On an even tighter budget, the GTX 1660 Ti can handle basic machine learning tasks.

Can I use my gaming GPU for deep learning?

Yes, gaming GPUs like the NVIDIA GeForce RTX 3080 or RTX 3070 can be used for deep learning. However, GPUs with more memory, such as the high-end RTX 4090 (24GB) or the data-center A100 (up to 80GB), perform better on large-scale training tasks, and the A100's architecture is optimized specifically for AI workloads.

What is the most powerful GPU for deep learning in 2025?

As of 2025, the NVIDIA H100 is considered one of the most powerful GPUs for deep learning. It is designed specifically for high-performance computing, making it ideal for training large neural networks and AI models.

Should I buy a single powerful GPU or multiple less powerful GPUs?

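The choice depends on your budget and the scale of your project. A single high-end GPU like the NVIDIA RTX 4090 can handle most tasks efficiently. For larger, more complex models, running multiple mid-tier GPUs (like the RTX 3080 or RTX 3090) in parallel can significantly reduce training times. Keep in mind that with standard data-parallel training, each GPU holds a full copy of the model, so several smaller GPUs do not simply add up to one large pool of memory.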


Conclusion

Selecting the right GPU for deep learning in 2025 is crucial for achieving optimal performance in AI projects. With powerful GPUs like the NVIDIA H100 and RTX 4090 dominating the market, businesses and researchers now have access to hardware that can handle large-scale AI workloads with ease. The decision between cloud-based GPUs and local processing GPUs, such as the EdgeAI Computer, ultimately depends on your project needs, budget, and scalability requirements.

For those starting out, mid-tier GPUs like the RTX 3060 or RTX 3070 can offer a great balance of performance and affordability. On the other hand, professionals working on complex deep learning models and large datasets will benefit from high-performance options like the A100 or H100.

No matter your budget or project scale, choosing the right GPU means faster model training and quicker experimentation, which in turn makes it easier to arrive at accurate, well-tuned models. Keep in mind factors such as CUDA cores, VRAM, memory bandwidth, and compatibility with AI frameworks when making your choice.

In the ever-evolving world of AI, the right GPU is a game-changer, helping to unlock new possibilities and drive innovation across industries.
