GPU vs CPU for AI: Choosing the Right Hardware for AI Tasks


In AI, hardware choice can make or break performance, efficiency, and scalability. Both the CPU (Central Processing Unit) and the GPU (Graphics Processing Unit) are crucial, but their strengths suit different parts of an AI workload. This article explores the CPU vs GPU for AI debate, focusing on their roles in machine learning, deep learning, and AI training.

What is a CPU and GPU for AI?

- CPU (Central Processing Unit):

The CPU is the brain of your computer, designed for general-purpose tasks. It has far fewer cores than a GPU, but each one is highly optimized for single-threaded performance. CPUs handle tasks that require intricate, branch-heavy logic, making them well suited to simple machine learning tasks and to any application that depends on high single-core performance.

- GPU (Graphics Processing Unit):

GPUs are designed specifically for parallel processing, which makes them ideal for computationally intensive tasks such as deep learning and AI model training. A GPU consists of thousands of smaller cores capable of handling many tasks simultaneously, which makes it superior for training large-scale AI models.

CPU vs GPU for AI: Key Differences

| Feature | CPU | GPU |
|---|---|---|
| Processing Style | A few powerful cores; best at sequential, branch-heavy work | Thousands of smaller cores; runs many operations in parallel |
| Latency and Throughput | Low latency for individual operations, but slow to get through large datasets | High throughput on large datasets once the data is on the device |
| Privacy | Usually available on-premises, so data can stay in-house | Data stays local with an on-premises GPU; rented cloud GPUs require sending data off-site |
| Computational Power | Limited by core count and clock speed | Powerful for large AI workloads due to parallelism |
| Scalability | Limited scalability due to core constraints | Easily scalable for large datasets and complex models |
| Cost | Generally lower upfront cost, but higher operational cost for large tasks | Higher upfront cost, but cost-efficient for large workloads over time |
| Ideal Use | Best for general-purpose tasks and lightweight models | Best for deep learning, large-scale machine learning, and real-time AI tasks |

CPU vs GPU for Machine Learning

When it comes to machine learning tasks, GPUs tend to have the upper hand due to their parallel processing capabilities. Training a model on a large dataset requires immense computational power, and GPUs can drastically speed up this process. A GPU runs thousands of operations at once, which cuts training time compared to a CPU, whose handful of cores can work on only a few operations at a time.

However, for simpler, less computationally intense tasks, CPUs still perform well. Tasks such as data preprocessing or smaller models that don’t require massive parallelization can be handled more efficiently by CPUs.
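One way to see why lightly parallel tasks gain little from a GPU is Amdahl's law, which bounds the speedup by the share of the work that can actually run in parallel. The sketch below is only an illustration: the function name `amdahl_speedup` and the workload fractions are assumptions made for this example, not measurements from the article.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup when `parallel_fraction` of the work is spread over `workers`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part runs at normal speed; only the parallel part is divided up.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Hypothetical workloads: these fractions are illustrative, not measured.
for label, fraction in [("preprocessing script", 0.50), ("large model training", 0.99)]:
    print(f"{label}: {amdahl_speedup(fraction, 1000):.1f}x with 1000 workers")
```

Under these assumed fractions, a job that is only half parallel tops out at roughly 2x no matter how many cores are available, while a job that is 99% parallel reaches roughly 91x. Real speedups also depend on memory bandwidth and data-transfer overhead, which this formula ignores.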


CPU vs GPU for Deep Learning

Deep learning requires massive amounts of computation to process large neural networks, making GPUs the ideal choice. GPUs are engineered for parallel processing, allowing them to handle the enormous matrix operations involved in deep learning. CPUs, while versatile, cannot match the speed of GPUs for training deep learning models, particularly when results are needed quickly.

For example, training a convolutional neural network (CNN) or a recurrent neural network (RNN) can mean updating tens of millions of parameters, and many of the underlying calculations can run at the same time, something that GPUs excel at. CPUs, on the other hand, are slower because they have far fewer cores to spread that work across.
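To give a sense of scale, the following sketch counts the weights and multiply-accumulate operations in a single fully connected layer. The helper name `dense_layer_cost` and the layer sizes are hypothetical, chosen only for illustration; real CNN and RNN layers are counted differently, but the pattern of many independent multiply-adds is the same.

```python
def dense_layer_cost(inputs: int, outputs: int, batch: int = 1) -> dict:
    """Count weights and multiply-accumulates for one fully connected layer."""
    parameters = inputs * outputs + outputs  # weight matrix plus one bias per output
    macs = batch * inputs * outputs          # one multiply-accumulate per weight per sample
    independent_outputs = batch * outputs    # each output value can be computed on its own
    return {
        "parameters": parameters,
        "macs": macs,
        "independent_outputs": independent_outputs,
    }


# Hypothetical layer sizes, chosen only for illustration.
cost = dense_layer_cost(inputs=4096, outputs=4096, batch=64)
for name, value in cost.items():
    print(f"{name}: {value:,}")
```

Because every output value depends only on its own row of weights and its own input sample, the outputs can all be computed at once, which is the kind of work a GPU's many cores are built for.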


AI Chip vs GPU: The Evolution of AI Hardware

In addition to CPUs and GPUs, specialized accelerators such as TPUs (Tensor Processing Units) and FPGAs (Field Programmable Gate Arrays) are gaining popularity. These chips are either built for AI workloads (TPUs) or can be reconfigured for them (FPGAs), with the aim of faster computation, lower latency, and higher energy efficiency.

TPUs, for instance, are optimized for deep learning and are widely used in large-scale model training. FPGAs offer flexibility and are used in custom AI workloads. However, GPUs remain the most popular choice for a variety of AI tasks due to their general-purpose utility and high performance.

Choosing Between CPU and GPU for Your AI Needs

For Machine Learning: If your project involves simple models or small datasets, a CPU may suffice. However, if you’re working with large datasets or need faster training times, a GPU will significantly improve efficiency.

For Deep Learning: GPUs are the clear choice for deep learning applications due to their parallel processing capabilities, which dramatically speed up model training times.

Cost Consideration: While GPUs can be more expensive upfront, they offer long-term savings when scaling AI workloads. CPUs, while less expensive, may incur higher operational costs for large-scale tasks.
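The cost trade-off above comes down to simple break-even arithmetic: the extra upfront spend on a GPU pays off once the monthly savings add up to it. The sketch below assumes a hypothetical helper, `breakeven_months`, and placeholder prices that are not taken from the article or from any vendor.

```python
import math
from typing import Optional


def breakeven_months(gpu_upfront: float, cpu_upfront: float,
                     gpu_monthly: float, cpu_monthly: float) -> Optional[int]:
    """First whole month at which the GPU setup's total cost is no higher than the CPU setup's.

    Returns 0 if the GPU setup is not more expensive upfront, and None if it
    never catches up because it does not save anything per month.
    """
    extra_upfront = gpu_upfront - cpu_upfront
    monthly_saving = cpu_monthly - gpu_monthly
    if extra_upfront <= 0:
        return 0
    if monthly_saving <= 0:
        return None
    return math.ceil(extra_upfront / monthly_saving)


# Placeholder figures for illustration only, NOT real pricing.
months = breakeven_months(gpu_upfront=10_000, cpu_upfront=2_000,
                          gpu_monthly=300, cpu_monthly=1_100)
print(f"Break-even after {months} months")
```

Plugging in your own hardware quotes and running costs (power, staff time, or rental fees) shows quickly whether a workload is large enough to justify the higher upfront price.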

EdgeAI Computer: Revolutionizing AI with Local Processing

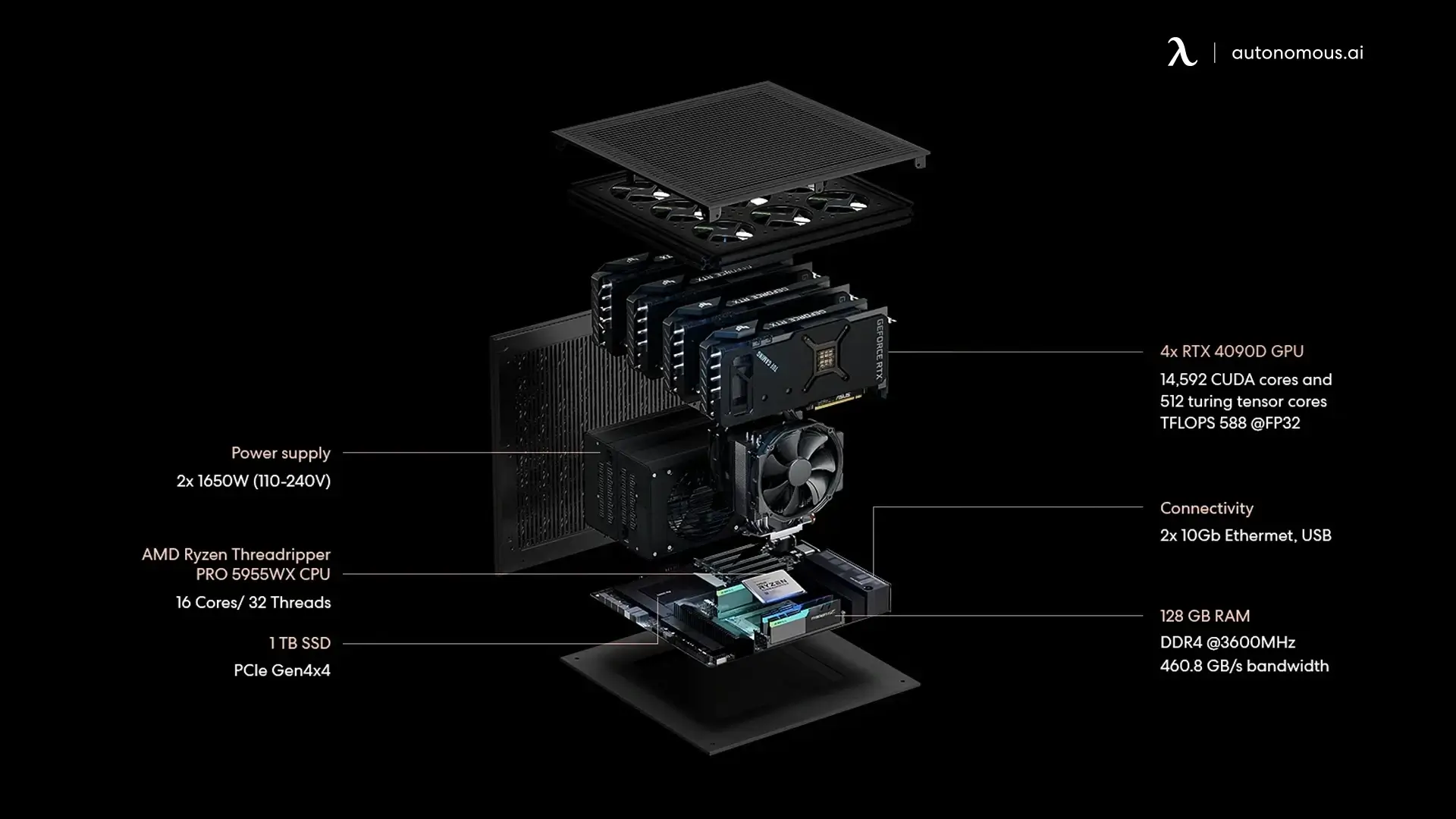

While CPUs and GPUs each have their strengths, the EdgeAI Computer is built to put GPU power on local hardware. With its local processing capabilities and NVIDIA RTX 4090D GPUs, the EdgeAI Computer allows businesses to run AI models locally without relying on cloud infrastructure.

Key features of the EdgeAI Computer include:

- High Performance: Powered by NVIDIA RTX 4090D GPUs, the EdgeAI Computer delivers unmatched AI performance, ideal for demanding tasks such as AI model training, data analysis, and real-time decision-making.

- Scalability: Whether you're a solo developer or part of a large team, the EdgeAI Computer can be configured with up to 8 GPUs, allowing for massive AI workloads.

- 100% Privacy: All AI workloads are processed locally, ensuring full data privacy. No data is sent to the cloud, reducing the risk of data breaches.

- Cost-Efficiency: The EdgeAI Computer offers a 96% savings over traditional cloud services, with no ongoing cloud fees.

In the AI chip vs GPU comparison, the EdgeAI Computer takes the GPU route, offering high-performance processing on local hardware.

The AI PC is designed for those who need powerful computing locally, and an AI PC assistant is an ideal complement, enhancing workflows through AI-powered automation.

FAQs

Does AI use CPU or GPU?

AI systems can use both CPUs and GPUs. However, GPUs are generally preferred for tasks like machine learning and deep learning because their parallel processing capabilities allow for faster computation than a CPU's smaller number of cores can deliver.

Why is GPU better than CPU for deep learning?

GPUs are optimized for handling multiple operations simultaneously, which is essential for deep learning. Since deep learning tasks involve large amounts of data and complex computations, the ability to process many calculations in parallel makes GPUs far more efficient than CPUs for these tasks.

Why is GPU better than CPU for machine learning?

In machine learning, especially when training large models, GPUs offer significant advantages in speed and performance over CPUs. GPUs can handle the massive matrix multiplications and data parallelism required for training models, dramatically reducing the time required for model training compared to CPUs.

Why is GPU more powerful than CPU?

GPUs are designed to execute thousands of operations simultaneously, making them far more powerful than CPUs for tasks like AI training and data processing. While CPUs are optimized for single-threaded performance and general computing tasks, GPUs are specialized for high-throughput operations, enabling faster processing of AI workloads.

Can EdgeAI Computer replace cloud AI for businesses?

Yes, the EdgeAI Computer offers an efficient alternative to cloud-based AI. It allows businesses to run large AI models locally, cutting down on cloud costs while ensuring greater data security and privacy by processing everything on-site.

When should I use GPU over CPU for AI tasks?

GPUs should be used for tasks that require high computational power, such as deep learning and machine learning model training. If the task involves heavy computations or large datasets, GPUs will outperform CPUs in speed and efficiency.
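In code, this choice often becomes a small runtime check that falls back to the CPU when no GPU is present. The sketch below assumes PyTorch as the framework, which the article does not name; `pick_device` is a hypothetical helper, while `torch.cuda.is_available()` is the standard PyTorch call for detecting a CUDA-capable GPU.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a CUDA GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; assumed here, not named in the article
    except ImportError:
        return "cpu"  # without PyTorch installed, fall back to the CPU
    return "cuda" if torch.cuda.is_available() else "cpu"


print(f"Running on: {pick_device()}")
```

The returned string can be passed to PyTorch calls such as `model.to(device)`, so the same script runs on a laptop CPU and on a GPU workstation without changes.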

What’s the main advantage of using GPU in AI over CPU?

The main advantage of using a GPU in AI is its ability to handle massive parallel computations, enabling much faster processing of AI models. This is essential for machine learning and deep learning tasks that involve large amounts of data and require real-time performance.

Conclusion

In the debate of CPU vs GPU for AI, both have essential roles in AI workloads. GPUs are the ideal choice for large-scale deep learning and machine learning tasks, offering superior computational power and efficiency. However, for businesses seeking to run powerful AI models locally, the EdgeAI Computer provides an optimal solution by offering high-performance GPU computing without cloud reliance. Whether you’re looking to scale AI models or protect sensitive data, the EdgeAI Computer is an excellent choice for businesses aiming to stay ahead in AI.
