
As machine learning continues to revolutionize industries, the need for high-performance computing hardware becomes increasingly vital. At the heart of this transformation is the GPU (Graphics Processing Unit), which accelerates the complex computations required for training machine learning models. Unlike CPUs, which handle sequential tasks, GPUs are optimized for parallel processing, enabling faster processing of vast amounts of data. This article will explore the best GPUs for machine learning in 2025, including top options like NVIDIA RTX 4090, A100, and others, to help businesses, researchers, and enthusiasts make informed decisions. Whether you're a startup, an AI professional, or a developer looking to optimize your workflow, this guide will provide the information you need to choose the right GPU for your machine learning projects.

1. What Is a GPU and Why Is It Important for Machine Learning?

1.1. Basic Definition of GPU

A GPU (Graphics Processing Unit) is a specialized piece of hardware designed to accelerate graphics rendering, but its capabilities go far beyond that. Initially, GPUs were created to manage and accelerate rendering images for displays, but over the years, they have evolved to handle more complex tasks, particularly those related to parallel processing and computation.

Unlike a CPU (Central Processing Unit), which has a small number of powerful cores built to run a few tasks very quickly, a GPU is optimized for parallelism. A CPU typically runs at most a few dozen threads at once, while a GPU can run thousands of lightweight threads simultaneously. This parallel architecture is what makes GPUs particularly effective for machine learning (ML), where the same operation must be applied to massive amounts of data.

While CPUs are still essential for tasks requiring sequential processing, such as handling logic or running operating systems, GPUs are the go-to solution for machine learning tasks. Their ability to handle parallel processing allows them to accelerate tasks that would otherwise take far too long on traditional CPU-based systems.

1.2. GPU’s Role in Machine Learning

Machine learning involves the creation and training of algorithms that can analyze and predict data. These models often require processing large datasets with billions of calculations. This is where GPUs shine. Machine learning tasks, particularly deep learning, benefit from the parallel nature of GPUs, which allows them to perform many computations simultaneously, speeding up the training process significantly.

For instance, when training a deep learning model on a large dataset, a GPU can distribute the work across thousands of cores. This is crucial in machine learning, as training models, especially deep neural networks (DNNs), can take a long time on a CPU-based system. Depending on the model and dataset, a GPU can often cut training time from days or weeks to hours.

One example of GPU acceleration in machine learning is the training phase of a deep learning model. Training involves calculating the gradient of the loss (the measure of error) with respect to each parameter in the network, and much of that work consists of independent calculations that can be done in parallel. A GPU is ideal for this task, enabling faster processing and allowing larger models and datasets to be trained more efficiently.
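To make the gradient step concrete, here is a minimal pure-Python sketch for a one-feature linear model with a mean-squared-error loss. The model, the data, and the function name `mse_gradients` are illustrative assumptions, not part of any framework. The point to notice is that each sample's contribution is computed independently of the others, which is the kind of work a GPU can spread across its cores.

```python
# Illustrative sketch: gradients of a mean-squared-error loss for y = w*x + b.
# All names and data here are hypothetical, chosen only for the example.

def mse_gradients(w, b, xs, ys):
    """Return (dL/dw, dL/db) for L = mean((w*x + b - y)^2)."""
    n = len(xs)
    # Each per-sample term depends only on that sample, so the terms can be
    # computed in any order (or all at once on parallel hardware).
    per_sample = [
        (2 * (w * x + b - y) * x, 2 * (w * x + b - y))
        for x, y in zip(xs, ys)
    ]
    grad_w = sum(gw for gw, _ in per_sample) / n
    grad_b = sum(gb for _, gb in per_sample) / n
    return grad_w, grad_b


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1

# Plain gradient descent: step against the gradient until the fit converges.
w, b = 0.0, 0.0
for _ in range(2000):
    grad_w, grad_b = mse_gradients(w, b, xs, ys)
    w -= 0.05 * grad_w
    b -= 0.05 * grad_b

print(round(w, 3), round(b, 3))
```

A real network has millions or billions of parameters rather than two, but the structure is the same: many small, independent terms that are summed at the end.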

1.3. Key Features: CUDA & Tensor Cores

When discussing GPUs in the context of machine learning, there are a few specialized features that make GPUs even more powerful and efficient for these tasks. These features include CUDA cores and Tensor cores.

- CUDA Cores:

CUDA (Compute Unified Device Architecture) is NVIDIA's parallel computing platform, and CUDA cores are the building blocks of a GPU's parallel processing power. Each CUDA core handles a small portion of a large computation, making them ideal for tasks like matrix multiplication, which is a common operation in machine learning. Within the same GPU generation, more CUDA cores generally means more operations can run simultaneously, which is key for the massive datasets in machine learning.

- Tensor Cores:

Tensor cores are a type of core designed specifically for deep learning tasks. They were introduced by NVIDIA with the Volta architecture and have since been an essential component in deep learning workloads. Tensor cores accelerate the most common operations used in machine learning, particularly those related to matrix multiplications, which are vital for training deep learning models. With Tensor cores, GPUs can execute calculations much more efficiently, which significantly speeds up the training process.

Tensor cores are particularly valuable when training neural networks, such as those used for image recognition, natural language processing, or speech recognition. They are built to handle the vast amounts of data required for these tasks, boosting efficiency and lowering the time needed to train large models.
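Since matrix multiplication is the operation both core types are described as accelerating, a small sketch may help show why it parallelizes so well. The code below is plain Python with hypothetical function names; it is not how a GPU library implements the operation, only an illustration of its structure and cost.

```python
# Illustrative sketch: a matrix product written so the independence of each
# output element is visible. Function names are hypothetical.

def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (both given as lists of rows)."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Every c[i][j] is its own dot product and reads nothing from any other
    # output element, so all m*n of them could be computed at the same time.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]


def matmul_flops(m, k, n):
    """Floating-point operations for the product: one multiply and one add per term."""
    return 2 * m * k * n


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))                    # [[19, 22], [43, 50]]
print(matmul_flops(4096, 4096, 4096))  # 137438953472
```

A single product of two 4096 x 4096 matrices already needs about 137 billion operations, and training repeats products like this many times per step, which is why hardware dedicated to them pays off.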

2. Top GPUs for Machine Learning

Choosing the best GPU for machine learning is crucial for optimizing your AI projects, training models, and speeding up inference tasks. With the rapid advancements in GPU technology, several powerful models are available to cater to different needs, from entry-level developers to high-performance computing tasks. Below are some of the best GPUs for machine learning, with details on their features and advantages for AI and machine learning workloads.

2.1. NVIDIA RTX 4090

- VRAM: 24 GB GDDR6X

- CUDA Cores: 16,384

- Tensor Cores: 512

- Memory Bandwidth: 1.008 TB/s

- Best For: High-end machine learning tasks, large datasets, and AI research.

The NVIDIA RTX 4090 is one of the most powerful GPUs available in the market, delivering outstanding performance for both machine learning and deep learning applications. With its 24 GB VRAM, high memory bandwidth, and powerful CUDA and Tensor cores, this GPU is ideal for handling large-scale AI models and datasets. It excels in AI model training, image processing, and natural language processing tasks.

Why It’s Good for ML: The RTX 4090 provides cutting-edge speed for training large neural networks and processing real-time data, making it a top choice for AI research labs, large enterprises, and businesses working with massive datasets.

2.2. NVIDIA A100 Tensor Core GPU

- VRAM: 40 GB HBM2 or 80 GB HBM2e

- CUDA Cores: 6912

- Tensor Cores: 432

- Memory Bandwidth: 1,555 GB/s (40 GB model); up to 2,039 GB/s (80 GB model)

- Best For: Large-scale training, deep learning models, and high-performance computing.

The NVIDIA A100 is designed specifically for data centers, providing extreme performance in AI model training and big data analytics. It is a staple in many enterprise-level machine learning environments, offering the power necessary for deep learning tasks like training complex neural networks and running high-performance AI applications.

Why It’s Good for ML: The A100 is optimized for large-scale training tasks. It supports Multi-Instance GPU (MIG), which partitions a single GPU into isolated instances so that multiple AI workloads can run on it simultaneously. Its massive memory bandwidth and Tensor Core performance make it one of the best GPUs for machine learning.

2.3. NVIDIA H100 Tensor Core GPU

- VRAM: 80 GB HBM3

- CUDA Cores: 16896

- Tensor Cores: 528

- Memory Bandwidth: 3.35 TB/s

- Best For: High-performance AI workloads and large language models.

The NVIDIA H100 is one of the latest GPUs to hit the market, offering next-level performance for AI applications, especially large language models (LLMs). The H100 is designed for the most demanding machine learning workloads, providing outstanding speed for training AI models and inference tasks. This GPU leverages NVIDIA’s Hopper architecture to handle massive datasets with lightning speed.

Why It’s Good for ML: Its vast VRAM and high memory bandwidth make it perfect for deep learning and transformer models in AI research, especially in areas such as NLP and image recognition.

2.4. NVIDIA RTX 6000 Ada Generation

- VRAM: 48 GB GDDR6

- CUDA Cores: 18,176

- Tensor Cores: 568

- Memory Bandwidth: 960 GB/s

- Best For: AI research, 3D rendering, and high-performance machine learning.

The RTX 6000 Ada Generation is a powerhouse for AI, capable of handling a wide range of AI tasks, including machine learning, deep learning, and real-time graphics rendering. This GPU offers a solid memory capacity and performance, making it an ideal choice for those who need both high-end computational power and reliable graphics performance.

Why It’s Good for ML: The RTX 6000 is an excellent GPU for AI researchers who need to run data-intensive models, with the added benefit of Tensor cores for optimized machine learning tasks. It also supports NVIDIA’s ecosystem, making it compatible with popular machine learning frameworks like TensorFlow and PyTorch.

2.5. AMD Instinct MI300X

- VRAM: 192 GB HBM3

- CUDA Cores: N/A (AMD uses its own CDNA 3 architecture with stream processors)

- Tensor Cores: N/A (AMD's equivalent units are called Matrix Cores)

- Memory Bandwidth: 5.3 TB/s

- Best For: High-performance computing (HPC) and AI research.

The AMD Instinct MI300X is an advanced GPU designed for machine learning, AI research, and high-performance computing (HPC). It provides impressive memory bandwidth and a large memory capacity of 192 GB, which lets large models and datasets stay in GPU memory.

Why It’s Good for ML: While NVIDIA dominates the AI space, AMD’s Instinct MI300X offers strong performance for machine learning tasks, especially in enterprise environments requiring massive computational power. It’s ideal for large-scale AI and HPC workloads.

2.6. NVIDIA RTX 3080/3070

- VRAM: 10 GB GDDR6X (RTX 3080) / 8 GB GDDR6 (RTX 3070)

- CUDA Cores: 8704/5888

- Tensor Cores: 272/184

- Memory Bandwidth: 760 GB/s (RTX 3080) / 448 GB/s (RTX 3070)

- Best For: Mid-range machine learning tasks and small teams.

The RTX 3080/3070 series offers a good balance of price and performance for machine learning tasks. While not as powerful as the RTX 4090 or A100, these GPUs are still more than capable of handling machine learning workloads for small teams or individual developers.

Why It’s Good for ML: These GPUs are ideal for entry-level AI model training, data analysis, and machine learning research, providing excellent performance at a more affordable price. They are great for individuals and smaller teams who don’t require enterprise-level GPUs but still need solid performance.

2.7. NVIDIA RTX 3060

- VRAM: 12 GB GDDR6

- CUDA Cores: 3584

- Tensor Cores: 112

- Memory Bandwidth: 360 GB/s

- Best For: Entry-level machine learning tasks, smaller projects, and learning AI.

The NVIDIA RTX 3060 offers impressive performance for entry-level machine learning projects. With 12 GB of VRAM and decent CUDA cores, it’s a good option for developers just getting started or for small-scale models that don’t require the extreme power of higher-end GPUs. The RTX 3060 provides solid support for frameworks like TensorFlow and PyTorch, making it ideal for learning and experimenting with machine learning models.

Why It’s Good for ML: The RTX 3060 offers a great price-to-performance ratio, delivering enough power for entry-level data science projects, image recognition, and basic deep learning models without breaking the bank. It’s perfect for learners, hobbyists, and smaller businesses with budget constraints.

2.8. GTX 1660 Ti

- VRAM: 6 GB GDDR6

- CUDA Cores: 1536

- Tensor Cores: N/A

- Memory Bandwidth: 288 GB/s

- Best For: Entry-level machine learning for beginners or hobbyists.

The GTX 1660 Ti is an affordable option for anyone just starting with machine learning. While it doesn’t have Tensor cores, its CUDA cores still provide parallel processing, making it a viable option for simple machine learning tasks, especially for developers learning the ropes. It’s a cost-effective GPU for small datasets and low-level training.

Why It’s Good for ML: The GTX 1660 Ti is a budget-friendly GPU that can help beginners practice machine learning techniques like classification, regression models, and basic neural networks. It’s ideal for learning and experimenting without making a large investment in high-end GPUs.
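The memory bandwidth figures listed above can be turned into a rough sense of scale. The sketch below estimates how long one full pass over a model's weights takes at each card's quoted bandwidth. The 4 GB model size (roughly 2 billion parameters at 2 bytes each) and the helper name `weight_read_ms` are assumptions made for the example, and the result is only a lower bound: it ignores compute time, caching, and everything else a real workload does.

```python
# Illustrative sketch: time to stream a model's weights once from GPU memory.
# Bandwidth figures are the ones quoted in this article; the 4 GB model size
# is an assumption chosen so the model fits on every card listed.

BANDWIDTH_GB_PER_S = {
    "RTX 4090": 1008,
    "A100": 1555,
    "H100": 3350,
    "MI300X": 5300,
    "RTX 3060": 360,
    "GTX 1660 Ti": 288,
}


def weight_read_ms(model_gb, bandwidth_gb_per_s):
    """Lower bound in milliseconds for one full pass over the weights."""
    return model_gb / bandwidth_gb_per_s * 1000


MODEL_GB = 4  # assumed model size

# Print the cards from highest to lowest bandwidth.
for name, bw in sorted(BANDWIDTH_GB_PER_S.items(), key=lambda kv: -kv[1]):
    print(f"{name:12s} {weight_read_ms(MODEL_GB, bw):6.2f} ms per pass")
```

For workloads that are limited by memory traffic rather than arithmetic, this ratio is one reason data-center cards with HBM memory pull ahead of consumer cards even when core counts look similar.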

3. Cloud vs. Local GPU for Machine Learning

When deciding between cloud and local GPUs for machine learning, businesses must consider factors like cost, performance, and data privacy. Below is a quick comparison of cloud GPUs versus local GPUs.

| Feature | Cloud GPUs | Local GPUs |
| --- | --- | --- |
| Cost | Pay-as-you-go; suitable for flexible budgets | High initial cost, but no ongoing fees |
| Scalability | Easily scalable for large datasets or spikes in demand | Limited by local hardware, but scalable with additional GPUs |
| Latency | Higher due to data transfer to the cloud | Low latency; real-time processing on local devices |
| Data Privacy | Lower privacy; data is sent to the cloud | 100% privacy; data stays local on the device |
| Performance | Varies depending on cloud provider | High performance with NVIDIA RTX 4090D GPUs |
| Maintenance | Managed by the cloud provider | Requires local maintenance but offers full control |

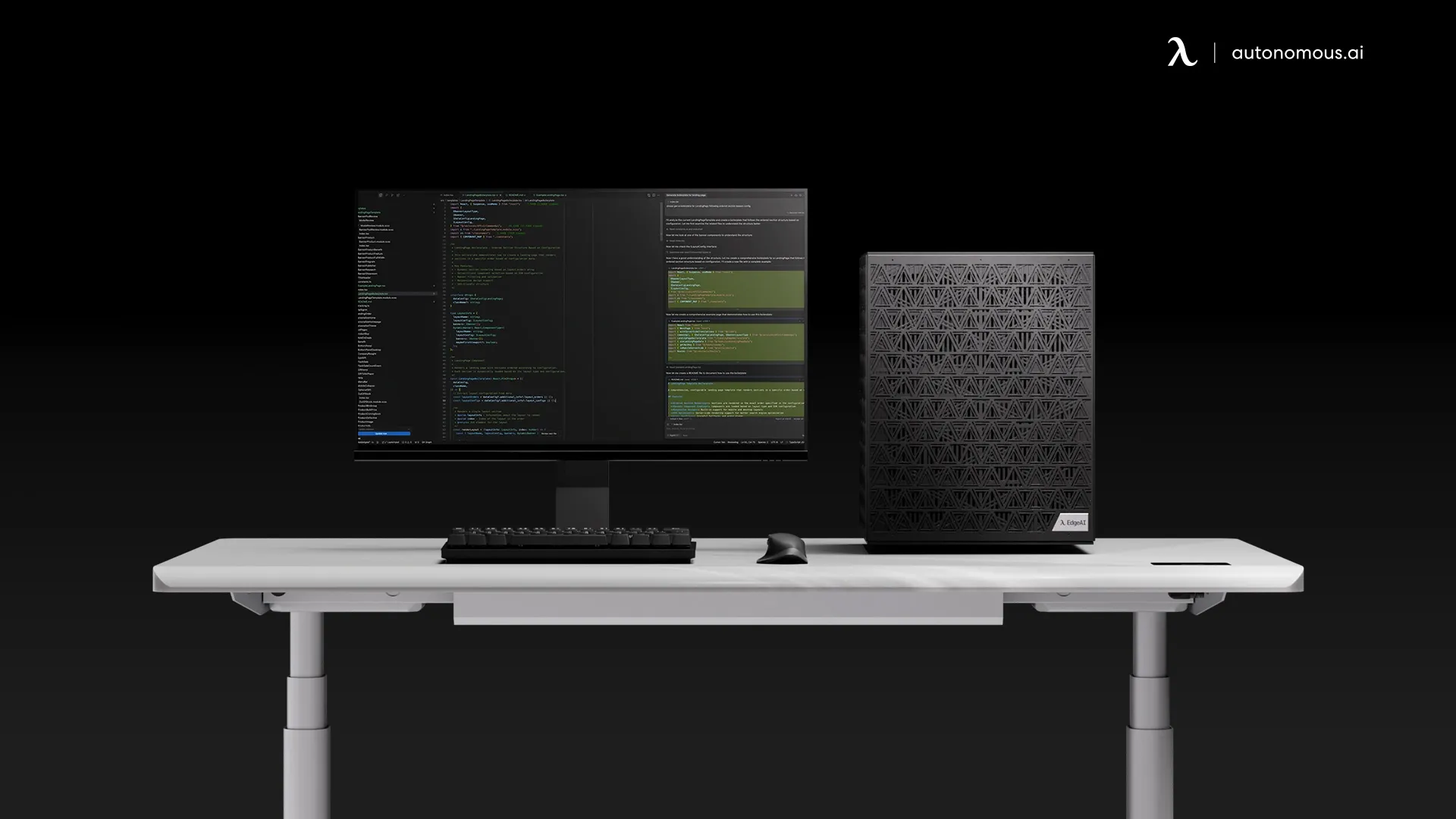

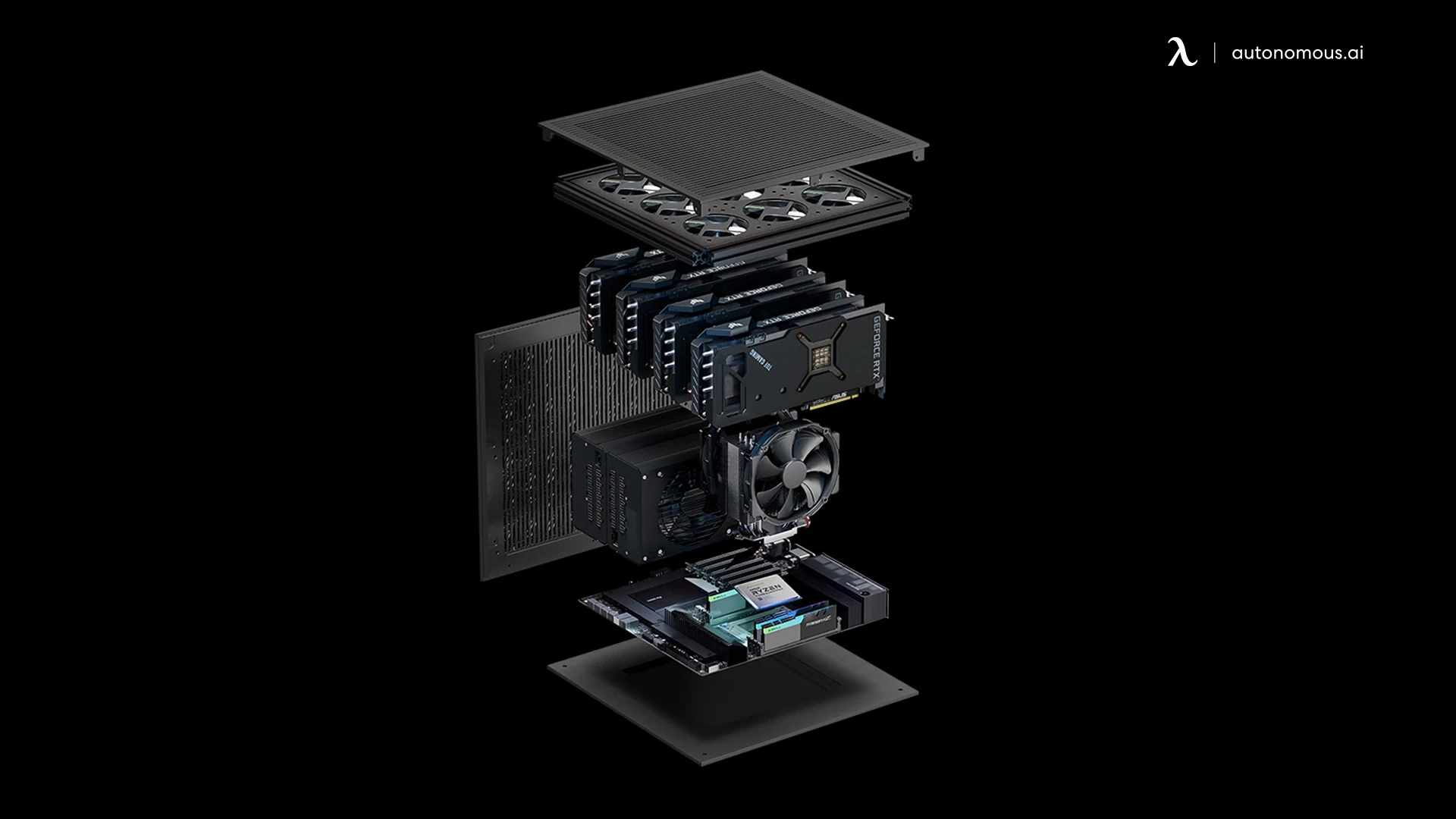

4. EdgeAI Computer: The Ideal Local GPU for Machine Learning

For businesses requiring the best of both worlds, the EdgeAI Computer provides top-tier local GPU performance with the added benefits of privacy, scalability, and cost-efficiency. Powered by NVIDIA RTX 4090D GPUs, the EdgeAI Computer allows businesses to process large datasets, run complex AI models, and make real-time decisions—all without relying on cloud infrastructure.

If you're curious about how local AI computing differs from traditional methods, learning what an AI PC is can offer valuable insights. Exploring AI PC assistants can also show you how intelligent desktop assistants enhance productivity.

For those interested in local data processing, learning what Edge AI is provides a deeper understanding of the technology. You can also explore Edge AI applications in industries like healthcare, manufacturing, and autonomous vehicles, where real-time decision-making is critical.

5. FAQs

What is the best GPU for machine learning?

The best GPU for machine learning depends on your specific needs. For high-end applications, the NVIDIA A100 or NVIDIA H100 is excellent for large-scale training and inference tasks. For more budget-friendly options, NVIDIA RTX 3090 and RTX 4090 are great choices, offering powerful performance at a relatively lower cost.

Why is GPU better than CPU for machine learning?

GPUs outperform CPUs in machine learning because they are designed for parallel processing, allowing them to handle many operations simultaneously. This parallelism enables faster computations, especially for tasks like training deep learning models, which require processing large amounts of data. A GPU vs. CPU comparison for AI workloads shows how large the gap is in these scenarios.

How does GPU accelerate deep learning?

GPUs accelerate deep learning by providing specialized hardware that performs parallel processing. With thousands of cores dedicated to processing data simultaneously, GPUs can train deep learning models much faster than CPUs, reducing the time required for model training from weeks to hours.

Can I use my laptop's GPU for machine learning?

Yes, you can use a laptop's GPU for machine learning, but the performance may be limited compared to high-end desktop GPUs. Many laptops come with NVIDIA GPUs (e.g., RTX 3070 or RTX 3080), which are suitable for small-scale machine learning tasks. However, for large datasets and complex models, you might want to consider a dedicated desktop GPU or cloud GPU services.

How much VRAM do I need for machine learning?

The amount of VRAM you need depends on the complexity of your models and datasets. For small to medium-sized models, 8GB to 12GB of VRAM (e.g., RTX 3070) should suffice. However, for larger models, particularly those involving deep learning and large datasets, GPUs with 24GB or more VRAM, such as the RTX 4090 or A100, are recommended.
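A back-of-envelope calculation can make these ranges easier to reason about. The sketch below multiplies a parameter count by an assumed number of bytes per parameter. The bytes-per-parameter figures are common rules of thumb, not exact values for any framework, and the function name `estimate_vram_gb` is hypothetical. Activations, batch size, and framework overhead are left out, so real usage will be higher.

```python
# Illustrative sketch: rough VRAM needed for model weights and optimizer state.
# The bytes-per-parameter figures are rule-of-thumb assumptions; activations
# and framework overhead are excluded.

BYTES_PER_PARAM = {
    "inference_fp16": 2,        # 16-bit weights only
    "inference_fp32": 4,        # 32-bit weights only
    "training_adam_mixed": 16,  # fp16 weights and gradients, fp32 copy, two Adam moments
}


def estimate_vram_gb(num_params, mode):
    """Approximate gigabytes (10^9 bytes) for the given parameter count and mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


for params in (125e6, 1.3e9, 7e9):
    infer = estimate_vram_gb(params, "inference_fp16")
    train = estimate_vram_gb(params, "training_adam_mixed")
    print(f"{params / 1e9:5.2f}B params: ~{infer:6.1f} GB inference, ~{train:6.1f} GB training")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB just to hold 16-bit weights for inference, which fits on a 24 GB card, while training it with Adam needs roughly 112 GB before activations, which exceeds a single 80 GB GPU.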

What is the role of CUDA cores in machine learning?

CUDA cores are the processing units within a GPU that handle parallel tasks. More CUDA cores mean the GPU can process more operations simultaneously, significantly speeding up machine learning tasks like training neural networks and running inference on large datasets.

Should I use cloud or local GPUs for machine learning?

For large-scale tasks or if you prefer not to invest in physical hardware, cloud GPUs are a great option. Providers like AWS, Google Cloud, and Azure offer powerful GPUs on-demand. However, if you need continuous, high-performance processing with better data privacy, investing in a local GPU like the EdgeAI Computer may be more cost-effective in the long run.
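Whether local hardware is more cost-effective in the long run comes down to a break-even calculation. The sketch below shows the arithmetic. Every price in it is a hypothetical placeholder rather than a quote from any provider or vendor, and the function name `break_even_hours` is made up for the example.

```python
# Illustrative sketch: hours of use at which buying a GPU matches renting one.
# Every price below is a hypothetical placeholder, not a quote from any vendor.

def break_even_hours(purchase_cost, cloud_rate_per_hour, local_cost_per_hour=0.0):
    """Hours after which cumulative cloud spend equals local spend."""
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        raise ValueError("local running cost must be below the cloud rate")
    return purchase_cost / saving_per_hour


hours = break_even_hours(
    purchase_cost=2000.0,      # assumed hardware price
    cloud_rate_per_hour=1.00,  # assumed rental rate
    local_cost_per_hour=0.10,  # assumed electricity and upkeep
)
print(f"Break-even after about {hours:.0f} hours ({hours / 24:.0f} days of continuous use)")
```

If the GPU would sit idle most of the time, the break-even point may never arrive, which is the case where pay-as-you-go cloud pricing tends to win.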

What’s the difference between Tensor cores and CUDA cores?

Tensor cores are specialized hardware units designed for matrix multiplications and other calculations required for deep learning. These cores significantly accelerate machine learning operations, particularly those involving deep neural networks. CUDA cores, on the other hand, are general-purpose cores designed for parallel processing across a wide range of tasks.

Can I run machine learning models without a GPU?

It is possible to run machine learning models without a GPU, and smaller classical models often train comfortably on a CPU. For deep learning, however, training will be much slower: CPUs are not optimized for the massive parallel processing these workloads require, so training large models can stretch into weeks. A GPU is highly recommended for any serious deep learning work.

Which GPU is best for deep learning?

In 2025, the NVIDIA H100 and A100 are the top choices for deep learning due to their exceptional processing power, memory bandwidth, and specialized features like Tensor cores. For more budget-friendly options, the NVIDIA RTX 4090 and RTX 6000 Ada Generation offer great value for high-performance tasks.

How do I optimize my GPU for machine learning tasks?

To optimize your GPU for machine learning:

- Ensure that you’re using GPU-enabled builds of frameworks like TensorFlow or PyTorch, with matching NVIDIA drivers and CUDA libraries installed.

- Enable mixed-precision training to speed up training without sacrificing too much accuracy.

- Regularly monitor GPU usage to ensure you're utilizing its full potential using tools like nvidia-smi.
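As one way to act on the monitoring tip, the sketch below reads utilization and memory use from `nvidia-smi` using its CSV query mode. The helper names `parse_gpu_csv` and `query_gpus` are hypothetical. The script assumes `nvidia-smi` is on the PATH and returns an empty list when it is not, so it is safe to run on a machine without an NVIDIA GPU.

```python
# Illustrative sketch: read GPU utilization and memory use from nvidia-smi.
# Helper names are hypothetical; the query fields are standard nvidia-smi ones.
import shutil
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_csv(text):
    """Parse 'util, used, total' lines (percent, MiB, MiB) into dicts."""
    rows = []
    for line in text.strip().splitlines():
        try:
            util, used, total = (int(field.strip()) for field in line.split(","))
        except ValueError:
            continue  # skip lines with missing or non-numeric fields
        rows.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return rows


def query_gpus():
    """Return one dict per GPU, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


for i, gpu in enumerate(query_gpus()):
    print(f"GPU {i}: {gpu['util_pct']}% busy, "
          f"{gpu['mem_used_mib']}/{gpu['mem_total_mib']} MiB")
```

Utilization that stays low during training often points to a bottleneck elsewhere, such as data loading, rather than to the GPU itself.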

6. Conclusion

Selecting the right GPU for machine learning can significantly impact the efficiency and success of your AI projects. From powerful NVIDIA A100 GPUs for large-scale models to more budget-friendly options like the RTX 3070, there are a variety of GPUs available for different use cases. For businesses or developers looking for high-performance, cost-effective solutions with added privacy and scalability, the EdgeAI Computer offers a fantastic choice. By harnessing the power of local AI processing, the EdgeAI Computer ensures businesses can execute demanding tasks like real-time video analysis and predictive health monitoring without relying on cloud infrastructure. Ultimately, the best GPU depends on your project's needs, whether you're focused on small-scale models or large enterprise-level AI workloads.
