Building an Efficient EdgeAI Server: A Guide to Dual-GPU Setups

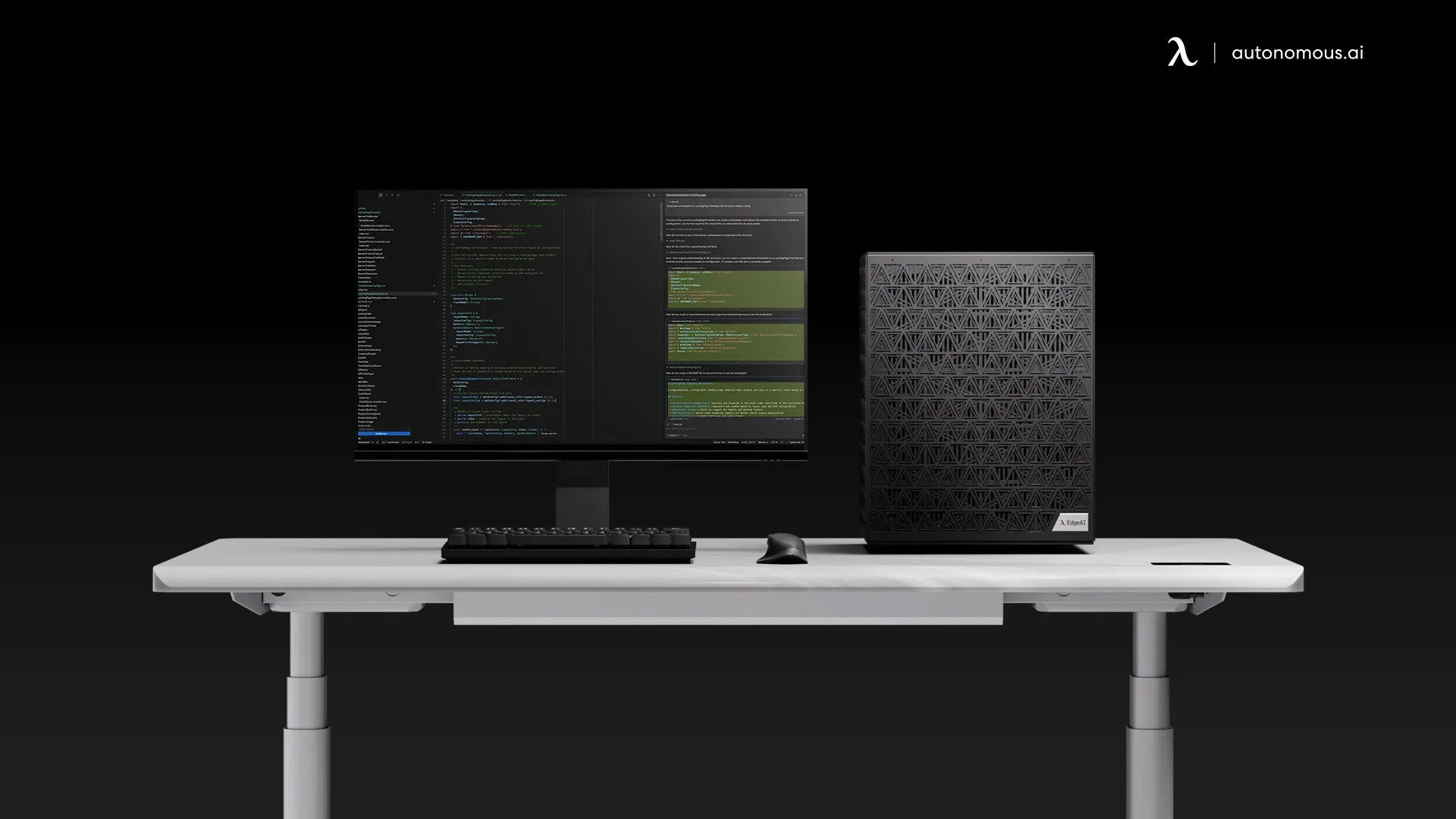

In the world of AI, the future isn't just about massive cloud data centers; it's about pushing computational power to the edge. For a growing number of developers and researchers, running complex models for inference and training on local machines is no longer a luxury—it's a strategic advantage.

Getting your own multi-GPU EdgeAI server isn't just a fun project; it's a smart investment. This article dives into why a purpose-built EdgeAI machine can outperform traditional cloud solutions and provides a step-by-step guide to building a powerful, dual-GPU system.

Why Go Local?

When faced with the need for serious GPU power, many people's first thought is to rent it from the cloud. However, setting up a local multi-GPU machine offers significant benefits that cloud-based services often can't match.

- Cost-Effectiveness: While the upfront cost of hardware can be high, the long-term operating cost of an on-premise machine can be significantly lower than paying for continuous GPU time in the cloud, especially for sustained, intensive workloads.

- Total Control: You have complete control over the hardware and software stack, allowing you to fine-tune every component for your specific tasks without vendor limitations.

- Low Latency: Processing data locally drastically reduces latency, which is critical for real-time applications like robotics, industrial automation, or live video analysis.

- Data Security: Sensitive data remains on-site, enhancing privacy and ensuring compliance with data protection regulations.
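Whether local hardware actually pays off comes down to simple arithmetic: the build cost divided by what you save for each hour you would otherwise rent. The sketch below assumes electricity is the only local running cost; the build price, cloud rate, power draw and electricity tariff are hypothetical placeholders, not quotes.

```python
def break_even_hours(hardware_cost: float, cloud_rate_per_hour: float,
                     power_kw: float, electricity_per_kwh: float) -> float:
    """Hours of use after which owning the machine beats renting cloud GPUs.

    Ignores resale value, maintenance and idle time; every input is a
    placeholder to be replaced with your own quotes.
    """
    local_cost_per_hour = power_kw * electricity_per_kwh  # electricity only
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        return float("inf")  # renting is cheaper per hour, so never breaks even
    return hardware_cost / saving_per_hour


# Hypothetical figures: $7,000 build, $2.00/hour for a comparable cloud
# instance, 1.2 kW draw under load, $0.15 per kWh.
hours = break_even_hours(7000, 2.00, 1.2, 0.15)
print(f"Break-even after ~{hours:,.0f} hours (~{hours / 24:,.0f} days of 24/7 use)")
```

With these assumed numbers the script prints `Break-even after ~3,846 hours (~160 days of 24/7 use)`. The takeaway is the shape of the trade-off: the more hours per day the GPUs are busy, the sooner the local machine wins.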

The 2xGPUs Project: A Build Guide

This project is about creating a high-performance machine that's both manageable and stable. To achieve a robust system, we’ll focus on the core components.

1. Motherboard

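The motherboard is the heart of the system, determining its scalability and performance. The ideal choice is a board with two PCIe x16-length slots wired to the CPU that run at x8/x8 when both are populated. Mainstream desktop CPUs on the platforms below expose 16 PCIe lanes for graphics, so true x16/x16 operation is generally not available on them.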

- Recommendation: Motherboards using the Intel Z690/Z790 chipset (for 12th/13th/14th Gen CPUs) or AMD X670E/X670 chipset (for Ryzen 7000 series CPUs) are excellent options.
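How much does x8/x8 cost? PCIe Gen3, Gen4 and Gen5 run at 8, 16 and 32 GT/s per lane with 128b/130b encoding, so theoretical one-direction bandwidth is easy to estimate. The sketch below ignores protocol overhead, so real throughput is somewhat lower. The RTX 4090 is a PCIe 4.0 card and the RTX 5090 a PCIe 5.0 card; neither supports NVLink, so traffic between the two GPUs also travels over PCIe.

```python
# Per-lane transfer rate in GT/s; Gen3 through Gen5 all use 128b/130b encoding.
TRANSFER_RATE_GT_S = {3: 8, 4: 16, 5: 32}


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    per_lane = TRANSFER_RATE_GT_S[gen] * (128 / 130) / 8  # GT/s -> GB/s after encoding
    return per_lane * lanes


for gen, lanes in [(4, 16), (4, 8), (5, 8)]:
    print(f"PCIe {gen}.0 x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.1f} GB/s")
```

This prints roughly 31.5, 15.8 and 31.5 GB/s. A Gen5 card in an x8 slot therefore gets about the same bandwidth as a Gen4 card at x16, assuming the board actually runs both slots at Gen5 (this varies by model, so check the manual).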

2. Central Processing Unit (CPU)

The CPU plays a crucial role in preparing data and managing tasks for the GPUs. A powerful CPU ensures that your GPUs aren't bottlenecked by the rest of the system.

- Recommendation: An Intel Core i7-13700K or AMD Ryzen 7 7700X offers a great balance of performance and price.
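One practical way the CPU "feeds" the GPUs is through parallel data-loading workers, for example the `num_workers` argument of PyTorch's `torch.utils.data.DataLoader`. The helper below is a hypothetical rule of thumb, not an official formula: keep a couple of threads free and split the rest evenly between the two GPU processes. For reference, the i7-13700K has 24 threads and the Ryzen 7 7700X has 16.

```python
import os
from typing import Optional


def loader_workers_per_gpu(num_gpus: int, cpu_threads: Optional[int] = None,
                           reserved: int = 2) -> int:
    """Hypothetical rule of thumb for splitting CPU threads between GPU feeders.

    `reserved` threads stay free for the OS and the main GPU processes;
    the rest are divided evenly, with at least one worker per GPU.
    """
    threads = cpu_threads if cpu_threads is not None else (os.cpu_count() or 1)
    return max(1, (threads - reserved) // num_gpus)


print(loader_workers_per_gpu(2, cpu_threads=24))  # i7-13700K (24 threads) -> 11
print(loader_workers_per_gpu(2, cpu_threads=16))  # Ryzen 7 7700X (16 threads) -> 7
print(loader_workers_per_gpu(2))                  # whatever this machine reports
```

Treat the result as a starting point and tune it by watching GPU utilization: if the GPUs sit idle waiting for batches, more workers (or a faster CPU) may help.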

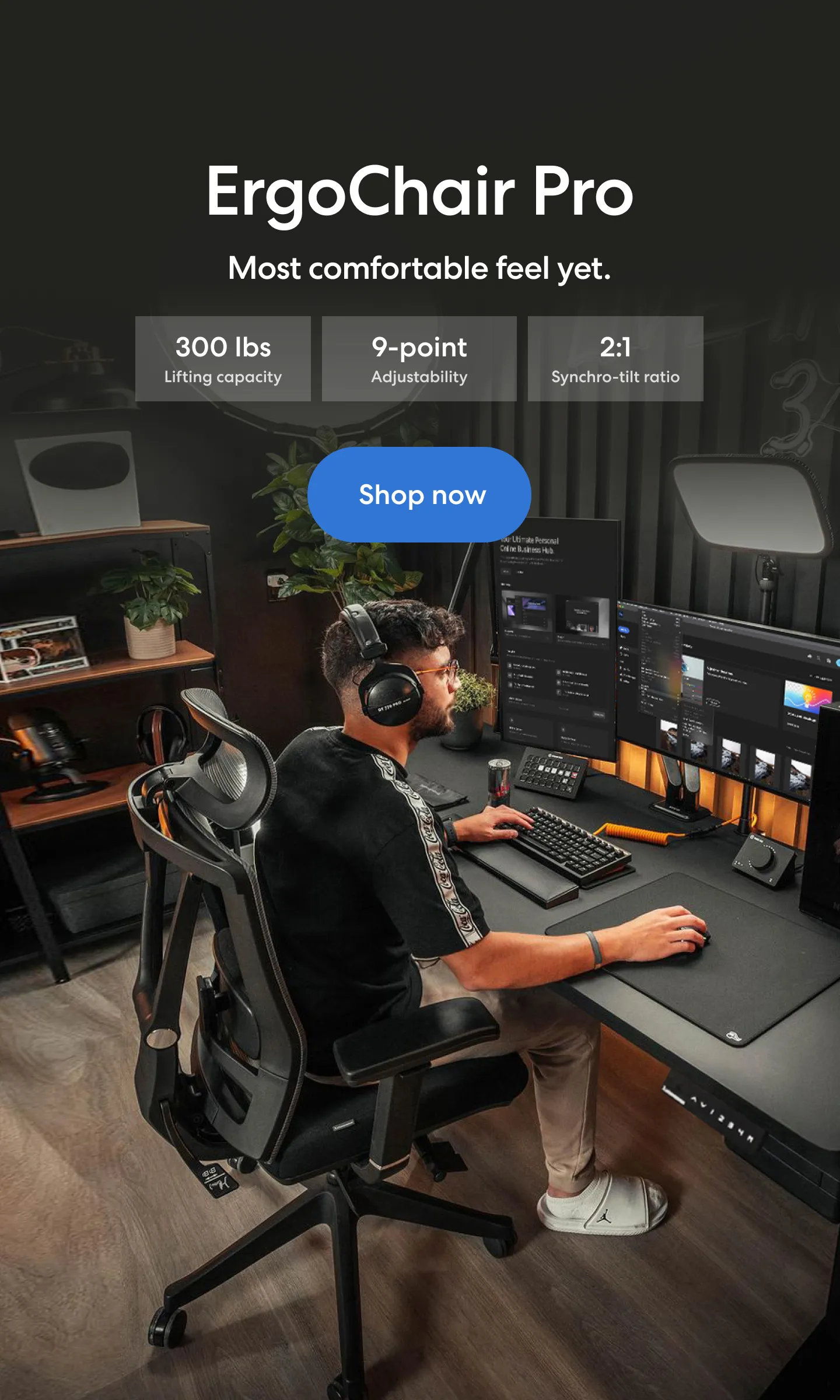

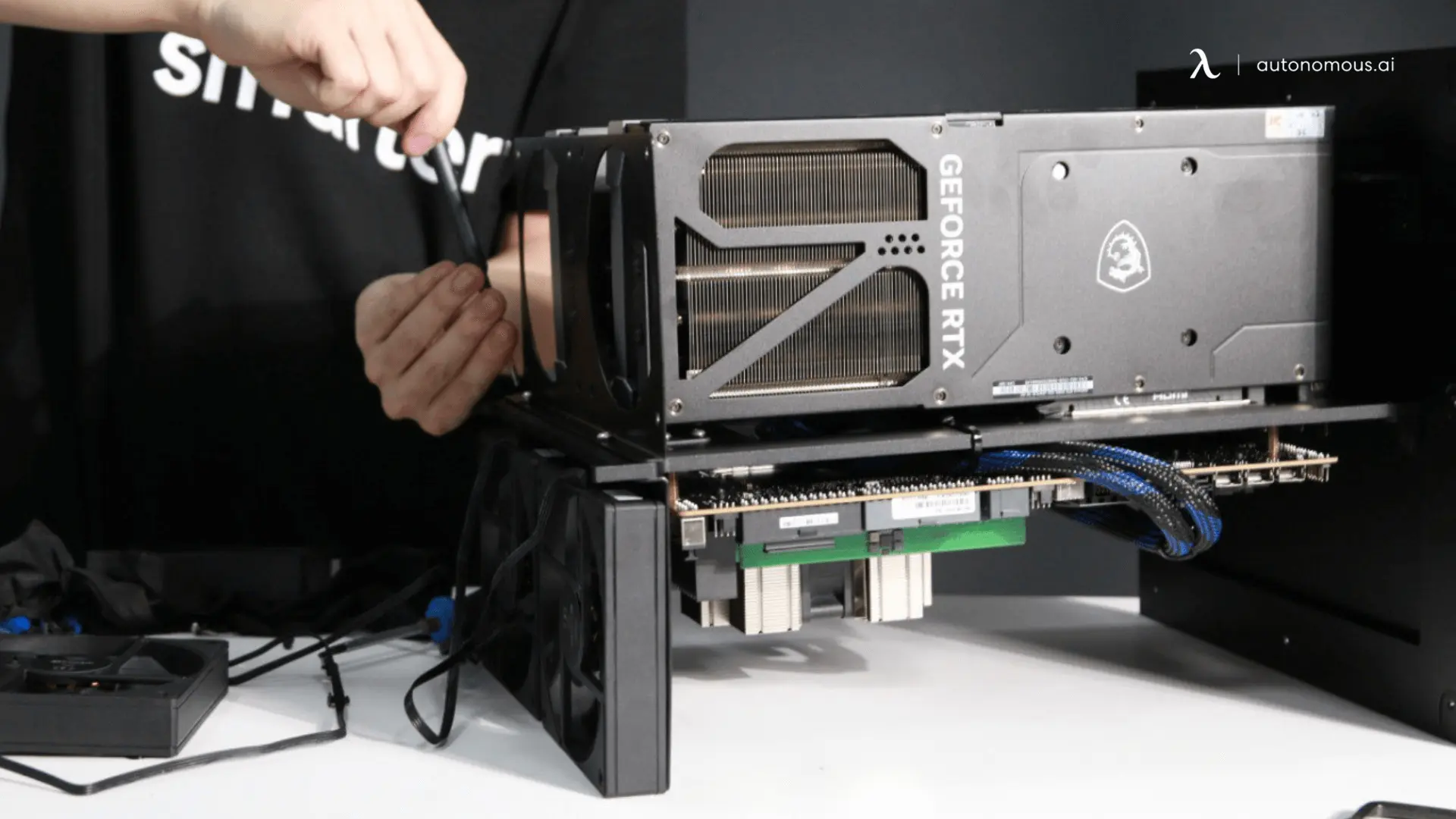

3. Graphics Processing Unit (GPU)

This is the core of your EdgeAI server. Two GPUs let you either split a model that is too large for one card's VRAM across both cards, or run two independent jobs side by side.

- Recommendation: The NVIDIA RTX 5090, with its Blackwell architecture and 32GB of VRAM per card, is the top choice. Two RTX 4090 cards (24GB each) are a more affordable alternative, at the cost of less total memory (48GB versus 64GB) and lower throughput.
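A quick way to decide between the two pairings is to estimate whether a model's weights fit in the combined VRAM. The sketch multiplies parameter count by bytes per parameter and adds an assumed 20% for activations and cache; that overhead factor is a hypothetical rule of thumb, and splitting a model across two cards also adds some communication cost that this estimate ignores.

```python
# Published VRAM per card, in GB.
VRAM_GB = {"RTX 4090": 24, "RTX 5090": 32}
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billion: float, dtype: str, overhead: float = 1.2) -> float:
    """Rough VRAM for model weights plus an assumed 20% for activations/cache."""
    # 1e9 parameters x N bytes each = N GB per billion parameters.
    return params_billion * BYTES_PER_PARAM[dtype] * overhead


for params_b, dtype in [(13, "fp16"), (24, "fp16"), (70, "int4")]:
    need = estimate_vram_gb(params_b, dtype)
    for card in ("RTX 4090", "RTX 5090"):
        total = 2 * VRAM_GB[card]
        verdict = "fits" if need <= total else "does not fit"
        print(f"{params_b}B {dtype}: ~{need:.1f} GB, {verdict} on 2x {card} ({total} GB)")
```

Under these assumptions a 24B-parameter model in fp16 (about 57.6 GB) is where the two options diverge: it exceeds a pair of RTX 4090s but fits on a pair of RTX 5090s.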

4. RAM and Storage

- RAM: 64GB is the recommended minimum, especially when handling large datasets. Opting for DDR5 RAM provides faster data transfer speeds.

- Storage: A fast NVMe SSD (Gen4 or Gen5) is essential to minimize data loading times.
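The benefit of fast storage shows up every time a large checkpoint is loaded. The sketch below computes best-case sequential read times; the drive speeds are assumed typical ratings rather than measurements, and the 60GB checkpoint (roughly a 30B-parameter model in fp16) is a hypothetical example.

```python
# Approximate sequential-read speeds in GB/s (assumed typical figures;
# check your drive's spec sheet).
READ_GB_S = {"SATA SSD": 0.55, "NVMe Gen4": 7.0, "NVMe Gen5": 12.0}


def load_seconds(size_gb: float, read_gb_s: float) -> float:
    """Best-case time to read a file sequentially from disk."""
    return size_gb / read_gb_s


checkpoint_gb = 60  # hypothetical: ~30B parameters stored in fp16
for drive, speed in READ_GB_S.items():
    print(f"{drive}: ~{load_seconds(checkpoint_gb, speed):.0f} s to read {checkpoint_gb} GB")
```

Real load times also depend on file format, deserialization and the host-to-GPU copy, so treat these figures as a lower bound.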

5. Power Supply (PSU) and Cooling System

With two high-end GPUs like the RTX 5090, the system's power consumption will be substantial, requiring a high-wattage, high-quality PSU.

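- PSU: A 1200W to 1600W unit with an 80 Plus Platinum rating is recommended for efficiency, and a high-quality unit matters for stability. At stock power limits, two RTX 5090s (575W each) push the build to the top of that range, while 1200W leaves little headroom even for two RTX 4090s (450W each).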

- Cooling: An all-in-one (AIO) liquid cooler for the CPU and high-airflow case fans are critical for keeping temperatures stable, so the GPUs can sustain their performance through long workloads.
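PSU sizing is a sum of component power ratings. The sketch uses manufacturer figures at stock settings (575W for the RTX 5090, 450W for the RTX 4090, Intel's 253W maximum turbo power for the i7-13700K, AMD's 142W package power limit for the Ryzen 7 7700X) plus a hypothetical 100W allowance for everything else, and reports the load as a share of the PSU's rating.

```python
# Rated power in watts at stock settings (manufacturer specs).
COMPONENT_WATTS = {
    "RTX 5090": 575,
    "RTX 4090": 450,
    "i7-13700K": 253,      # Intel maximum turbo power
    "Ryzen 7 7700X": 142,  # AMD package power tracking (PPT) limit
}
OTHER_WATTS = 100  # hypothetical allowance for board, RAM, drives, fans, pump


def psu_load(gpu: str, num_gpus: int, cpu: str, psu_watts: int):
    """Return (estimated peak draw in W, fraction of PSU capacity)."""
    draw = COMPONENT_WATTS[gpu] * num_gpus + COMPONENT_WATTS[cpu] + OTHER_WATTS
    return draw, draw / psu_watts


for gpu, cpu in [("RTX 4090", "Ryzen 7 7700X"), ("RTX 5090", "i7-13700K")]:
    for psu in (1200, 1600):
        draw, frac = psu_load(gpu, 2, cpu, psu)
        print(f"2x {gpu} + {cpu}: ~{draw} W = {frac:.0%} of a {psu} W PSU")
```

With these assumptions, two RTX 5090s plus an i7-13700K come to about 1503W, which exceeds a 1200W unit and runs a 1600W unit at about 94%. If that is too close for comfort, lowering each card's power limit with `nvidia-smi -pl` is a common compromise.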

Software and Setup

After assembling the hardware, the next step is to get the software stack configured to unlock the system's full potential.

1. Operating System:

A Linux distribution such as Ubuntu 22.04 LTS is a solid choice thanks to its strong community support for AI development.

2. Install Drivers and Toolkits:

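- Install the latest NVIDIA drivers for your GPUs. RTX 50-series cards such as the RTX 5090 need a recent driver branch (R570 or newer).

- Install the CUDA Toolkit, NVIDIA's parallel computing platform, if you plan to compile CUDA code (RTX 50-series support starts with CUDA 12.8). Prebuilt PyTorch wheels ship with their own CUDA runtime, so the toolkit is optional if you only use prebuilt frameworks.

- Install cuDNN (CUDA Deep Neural Network library) to accelerate deep learning operations; prebuilt PyTorch wheels also bundle a compatible version.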
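After installing the driver, it is worth confirming that both cards are visible and linked as expected. The sketch below shells out to `nvidia-smi` with its `--query-gpu` option (the field names come from `nvidia-smi --help-query-gpu`) and parses the CSV output. The helper names are hypothetical, and the PCIe width reported depends on your board: an x8 reading per card is normal on an x8/x8 layout.

```python
import shutil
import subprocess

# Field names as accepted by `nvidia-smi --query-gpu` (see --help-query-gpu).
QUERY = "index,name,driver_version,memory.total,pcie.link.width.current"


def parse_gpu_csv(text: str) -> list:
    """Turn `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        index, name, driver, mem_mib, width = [f.strip() for f in line.split(",")]
        gpus.append({"index": int(index), "name": name, "driver": driver,
                     "memory_mib": int(mem_mib), "pcie_width": int(width)})
    return gpus


def query_gpus() -> list:
    """Return the GPUs the driver can see, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver not installed or not on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        return []  # nvidia-smi present but could not talk to any GPU
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    print(f"{len(gpus)} GPU(s) visible to the driver")
    for gpu in gpus:
        print(f"  GPU {gpu['index']}: {gpu['name']}, driver {gpu['driver']}, "
              f"{gpu['memory_mib']} MiB, PCIe x{gpu['pcie_width']}")
```

On a working dual-GPU build the first line should report two GPUs; fewer usually points to a driver, seating or power-cable problem.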

3. Set up Libraries:

Using Python, install key AI libraries like PyTorch and TensorFlow, as well as model-specific libraries like transformers or diffusers.
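Once the libraries are installed, a short script can confirm that the framework actually sees both GPUs. This sketch covers PyTorch only, using `torch.cuda.is_available()`, `torch.cuda.device_count()`, `torch.cuda.get_device_name()`, `torch.version.cuda` and `torch.backends.cudnn.version()`; the `framework_report` name is hypothetical, and it degrades gracefully if PyTorch is not installed.

```python
import importlib.util


def framework_report() -> dict:
    """Summarize what PyTorch can see; works even if torch is not installed."""
    report = {"torch_installed": False, "cuda_available": False,
              "cuda_version": None, "cudnn_version": None, "devices": []}
    if importlib.util.find_spec("torch") is None:
        return report
    import torch  # imported lazily so the check runs without PyTorch

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    report["cuda_version"] = torch.version.cuda  # None on CPU-only builds
    if report["cuda_available"]:
        report["cudnn_version"] = torch.backends.cudnn.version()
        report["devices"] = [torch.cuda.get_device_name(i)
                             for i in range(torch.cuda.device_count())]
    return report


if __name__ == "__main__":
    for key, value in framework_report().items():
        print(f"{key}: {value}")
```

On a correctly configured build, `devices` should list two entries. TensorFlow users can run the equivalent check with `tf.config.list_physical_devices("GPU")`.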

Conclusion

Building a multi-GPU EdgeAI server is a strategic decision for anyone who wants control, performance, and cost efficiency. A correctly designed and optimized system is more than a powerful tool: it is a robust foundation for taking your AI projects from research to real-world application.

For detailed component lists and setup instructions, you can refer to the official 2xGPUs GitHub repository.

If you want to skip the build, you can find a setup that fits your needs here: https://www.autonomous.ai/edgeai
