Why On-Premise AI is the Future, Powered by EdgeAI and Ollama


In the fast-paced world of artificial intelligence, it's easy to get swept up in the grand narratives of cloud computing. For years, the mantra has been clear: if you want to build and deploy cutting-edge AI, especially large language models (LLMs), the cloud is your only option. It's where the immense computing power resides, where scalability seems limitless, and where groundbreaking models often emerge.

But what if there was another way? What if you could harness that same AI power, but with greater control, enhanced privacy, and significant cost savings, right there in your own office or data center?

At Autonomous, we believe the future of AI is a hybrid one, and that's why we’ve developed the EdgeAI device. This powerful on-premise GPU workstation can ship with Ollama, vLLM, or llama.cpp pre-installed, the leading open-source frameworks for running LLMs locally. This approach isn't just about a new product; it's a pivotal step towards democratizing access to advanced AI, shifting the paradigm from purely cloud-dependent models to powerful, on-premise solutions.

What Is Edge AI Computing and How Does It Work?

At its core, Edge AI computing refers to the processing of AI tasks directly on the device where the data is collected, rather than sending that data to a centralized cloud server. Think of it as a smart device that can make decisions in real time without needing an internet connection.

The way Edge AI works is by bringing the computational power closer to the data source. Instead of data traveling from your device, across the internet, to a distant data center for processing, the AI model runs on the Autonomous EdgeAI device itself. This is made possible by its powerful on-premise GPU, with Ollama serving as the software framework that loads the models and runs the complex calculations they require on that GPU. This paradigm shift offers several critical advantages:

1. Speed

Eliminating the round trip to the cloud removes network latency from every request, enabling near-instant responses for latency-sensitive applications like autonomous vehicles, industrial automation, and real-time security systems.

2. Privacy and Security

Data is processed and stored locally, which reduces exposure to breaches in transit or on third-party servers and simplifies compliance with strict data regulations. For industries handling confidential information, such as finance, healthcare, and legal, running AI on-premise keeps sensitive data inside a secure, controlled environment that never leaves the premises. This is crucial for maintaining customer trust and meeting regulatory requirements.

3. Cost-Effectiveness

Over the long term, running models on-premise can significantly lower costs by reducing reliance on expensive cloud computing resources and data transfer fees. Companies gain predictable, scalable control over their AI infrastructure.

4. Reliability

Edge AI applications can function seamlessly even in environments with limited or no internet connectivity.

5. Innovation with Greater Freedom

Developers can experiment, fine-tune, and deploy custom models without the fear of accumulating massive cloud bills. This encourages rapid prototyping and innovation, allowing companies to tailor AI to their unique business needs.
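To make the cost argument concrete, the sketch below computes a simple break-even point: the number of months after which an upfront hardware purchase is paid back by avoided cloud spend. The function name and every figure in the usage line are hypothetical placeholders, not EdgeAI pricing or real cloud rates, so substitute your own numbers. The model deliberately ignores depreciation, staff time, and changing usage.

```python
import math


def break_even_months(hardware_cost: float,
                      monthly_cloud_cost: float,
                      monthly_local_cost: float) -> float:
    """Return the months needed for cloud savings to cover the hardware cost.

    hardware_cost:      one-time purchase price of the on-premise machine
    monthly_cloud_cost: what the same workload would cost per month in the cloud
    monthly_local_cost: monthly running cost on-premise (power, maintenance)

    Returns math.inf when running locally saves nothing per month.
    """
    monthly_savings = monthly_cloud_cost - monthly_local_cost
    if monthly_savings <= 0:
        # No monthly saving means the hardware never pays for itself.
        return math.inf
    return hardware_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(break_even_months(hardware_cost=6000,
                            monthly_cloud_cost=800,
                            monthly_local_cost=200))  # → 10.0
```

With these placeholder inputs the machine pays for itself after 10 months; every month of use after that is a saving relative to the cloud.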

EdgeAI is a plug-and-play PC with Ollama pre-installed, allowing you to start managing AI models immediately.


This technology is the driving force behind smart factories, intelligent retail spaces, and even next-generation personal devices. It’s about bringing the power of AI closer to the source of the data.

How to Run AI Locally

Running complex AI models locally has been a challenge, but the rise of user-friendly frameworks like Ollama has changed the game. Ollama simplifies the entire process, handling the heavy lifting of model quantization to make large models smaller and faster. The EdgeAI device comes with everything you need for a straightforward setup:

1. Plug and Play:

Simply plug in your EdgeAI device. It comes with a Linux-based operating system and pre-installed software, ready to go.

2. Access Ollama:

The pre-installed Ollama framework allows you to start managing AI models immediately. You can access it via the command line or through its API.

3. Pull Your Desired Model:

Open a terminal and use the simple ollama pull command to download a model from the Ollama library. For example, to download the gpt-oss-20b model, whose library tag is gpt-oss:20b, you would run: ollama pull gpt-oss:20b.

4. Run Your Model:

Once the download is complete, you can start interacting with the model. Just run ollama run gpt-oss:20b to start a chat session directly in your terminal, or use a programming language like Python to integrate the model into your application.
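For the Python route mentioned in step 4, here is a minimal sketch using only the standard library. It assumes Ollama is listening on its default local address, `http://localhost:11434`, and that `gpt-oss:20b` has already been pulled. It calls Ollama's `/api/generate` endpoint with streaming turned off, so the reply arrives as a single JSON object whose `response` field holds the generated text. The helper function names are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumed default address of the local Ollama server; adjust if your
# setup exposes Ollama on a different host or port.
OLLAMA_URL = "http://localhost:11434"
MODEL = "gpt-oss:20b"  # Ollama library tag for gpt-oss-20b


def build_generate_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def generate(prompt: str, model: str = MODEL, timeout: float = 300.0) -> str:
    """Send a prompt to the local model and return its complete reply."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return parse_generate_response(resp.read())


if __name__ == "__main__":
    # Requires the Ollama server to be running and the model already pulled.
    print(generate("Explain edge AI in one sentence."))
```

Because the request goes to `localhost`, the prompt and the reply stay on the machine. For token-by-token output, set `"stream"` to `True` and read the newline-delimited JSON chunks instead.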

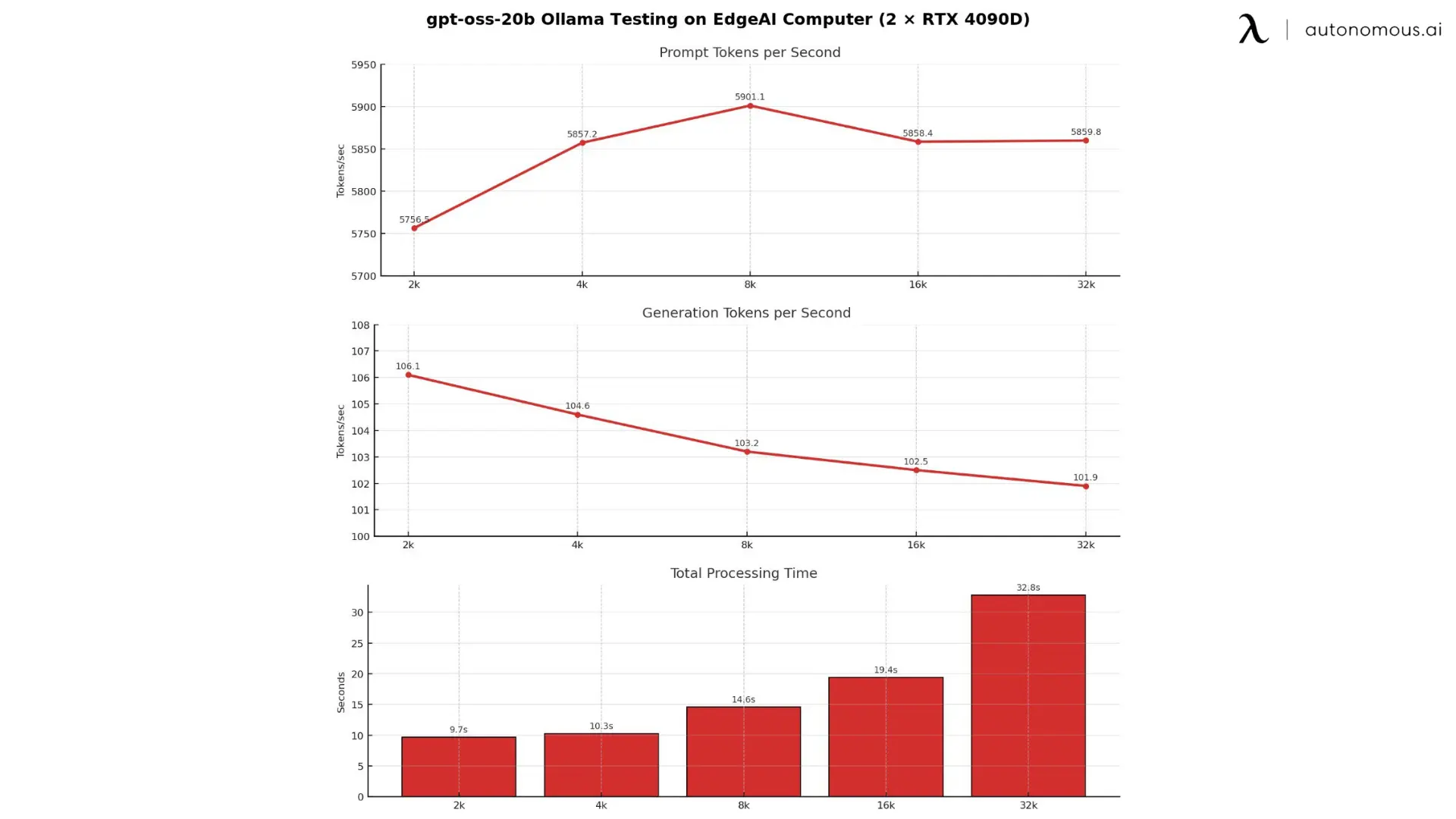

With Ollama pre-installed, EdgeAI gives developers a smooth experience out of the box. (The result of running gpt-oss-20b with Ollama on EdgeAI.)


This seamless process empowers developers to iterate faster, knowing their compute resources are dedicated and their data is secure. It allows enterprises to explore new AI applications that were previously out of reach due to privacy concerns or prohibitive cloud costs.
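The model quantization that Ollama handles is a large part of why big models can run on a single workstation. A rough, weight-only estimate of memory is parameters × bits per weight ÷ 8 bytes, and the sketch below applies it to a hypothetical 20-billion-parameter model. It ignores the KV cache, activations, and runtime overhead, and real quantized formats store some extra per-block metadata, so actual memory use will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    bytes_needed = num_params * bits_per_weight / 8
    return bytes_needed / 1e9


if __name__ == "__main__":
    params = 20e9  # hypothetical 20-billion-parameter model
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(params, bits):.0f} GB")
```

Going from 16-bit to 4-bit weights cuts the estimate from about 40 GB to about 10 GB, which can decide whether a model fits in a single GPU's memory at all.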

Join the Revolution: Your AI, Your Way

The availability of devices like EdgeAI, combined with powerful, user-friendly frameworks like Ollama, vLLM, and llama.cpp, marks a significant shift towards a more decentralized and democratized AI landscape. While the cloud will undoubtedly remain crucial for certain aspects of AI (like massive model training or global-scale deployments), the ability to bring powerful AI capabilities on-premise offers a vital and increasingly attractive alternative.

This is a future where companies can strategically choose where their AI workloads reside, balancing the benefits of cloud scalability with the unparalleled control, privacy, and cost-effectiveness of local compute. It's a future where innovation isn't constrained by recurring bills or data sovereignty concerns, but rather unleashed by accessible, high-performance, and secure AI infrastructure.

Ready to take control of your AI? Explore how EdgeAI can transform your AI strategy and deliver significant savings.
