
The Cloud Paradox: Why the AWS Outage of '25 Demands a Better Solution than Cloud


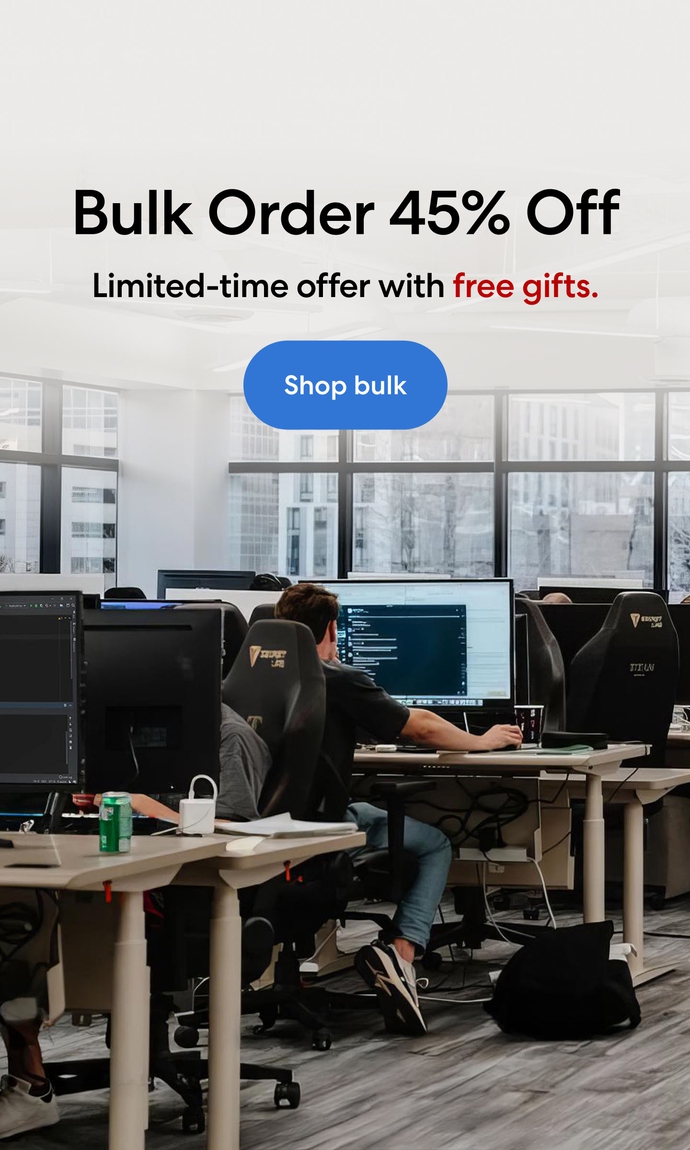

Much of the digital world came to a grinding halt on October 20, 2025. It wasn't a cyberattack, nor was it a hardware failure. It was a seemingly minor DNS resolution error affecting the DynamoDB endpoint in AWS's US-EAST-1 region that triggered a massive cascading failure. For over 15 hours, global services, including numerous companies running cutting-edge AI and machine learning workloads on cloud GPU infrastructure, were paralyzed.

This event should serve as the definitive wake-up call: our industry's dependence on hyper-centralized cloud providers presents an existential risk. For AI, the future is neither cloud-only nor on-premise-only. It must be hybrid.

The Vulnerability of Centralization

The core function of modern AI, from the largest Large Language Models (LLMs) to specialized computer vision systems, relies on two main phases: training and inference. Both heavily utilize dedicated GPU clusters.

When US-EAST-1 struggled, the consequences rippled instantly:

- Training Disruption: Projects requiring massive, multi-day training runs faced slowdowns, data access issues (from S3), and potential halts, leading to significant loss of expensive compute time.

- Inference Failure: Services that rely on real-time, low-latency AI inference (like certain financial trading algorithms or advanced AI chatbots) simply stopped working. Because their models and databases were all tethered to the centralized cloud network, there was no backup, no redundancy, and no local failover.

The outage exposed the Achilles' heel of the current cloud model: the risk of a single point of failure. The enormous benefit of scale comes with an equally enormous risk of total global service disruption.


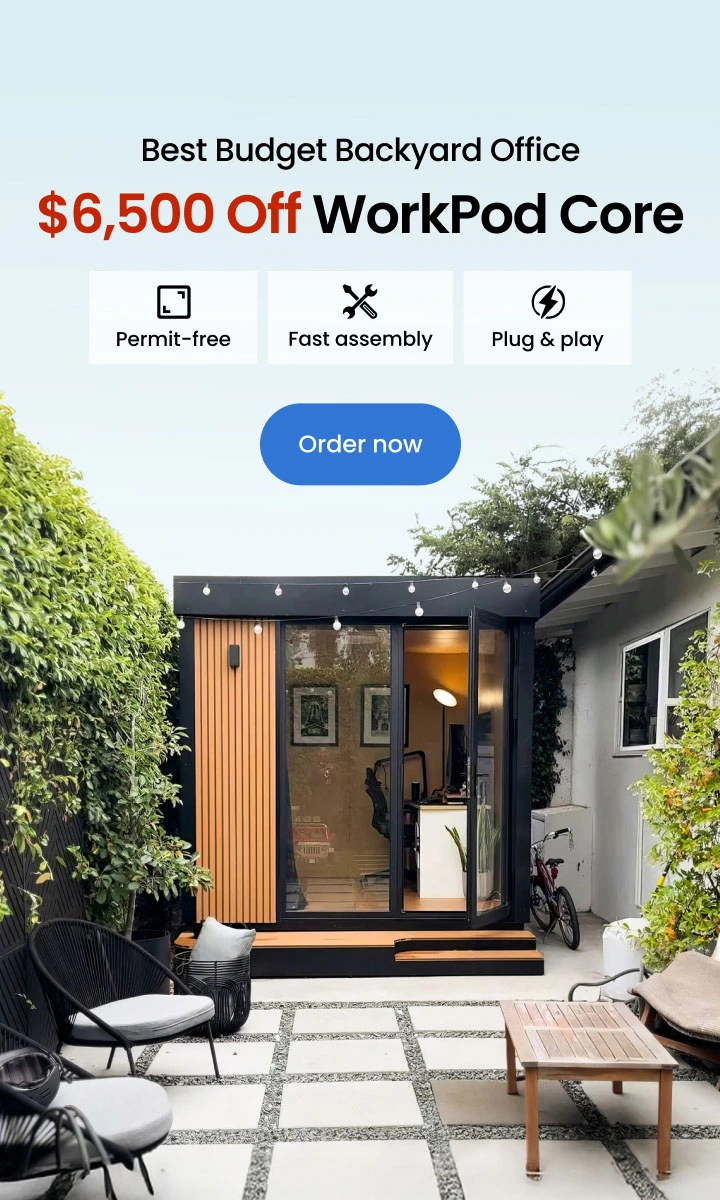

Shifting the Paradigm: Cloud for Power, Edge for Endurance

We must move beyond the binary thinking of "Cloud vs. On-Premise." The critical differentiation is between the needs of Training and the needs of Inference.

1. The Cloud Advantage (Training)

The hyperscalers—AWS, Azure, GCP—remain the superior choice for AI Training. They offer virtually unlimited elasticity, a massive catalog of specialized GPU instances, and an OpEx (operational expenditure) model that avoids upfront costs. When you need to train a 100-billion-parameter model, the sheer computational firepower and easy resource pooling of the cloud are indispensable.

2. The Edge/On-Premise Imperative (Inference)

For services that require sub-millisecond response times or cannot afford even minutes of downtime, Edge AI and Hybrid Infrastructure using products like Autonomous’ EdgeAI server are non-negotiable.

Edge AI—the deployment of GPUs and compute resources closer to the data source or end-user—offers two decisive advantages:

- Latency Control: Inference requests don't need to travel across regions or continents to a centralized data center, drastically improving response times.

- Resilience: An Edge or On-Premise GPU environment that has no runtime dependency on the cloud is insulated from the fate of US-EAST-1. If a cloud region goes down, your core product's real-time functionality remains active. This separation of concerns protects uptime for mission-critical tasks.
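The resilience argument can be made concrete with a minimal failover sketch. Everything named here (`Backend`, `infer_with_failover`, and the stub `cloud_infer` and `edge_infer` functions) is hypothetical and stands in for whatever inference clients a real deployment would use. The cloud stub simply simulates an unreachable region.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

# Hypothetical type: a backend is any callable that maps a prompt to a result.
InferFn = Callable[[str], str]


@dataclass
class Backend:
    name: str
    infer: InferFn


class AllBackendsFailed(RuntimeError):
    """Raised when every configured backend has failed."""


def infer_with_failover(prompt: str, backends: Sequence[Backend]) -> Tuple[str, str]:
    """Try each backend in order; return (backend_name, result) from the first success."""
    errors = []
    for backend in backends:
        try:
            return backend.name, backend.infer(prompt)
        except Exception as exc:  # a real client would catch timeouts/connection errors specifically
            errors.append(f"{backend.name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


# Stub backends for illustration only (assumptions, not real endpoints).
def cloud_infer(prompt: str) -> str:
    raise ConnectionError("simulated regional outage")


def edge_infer(prompt: str) -> str:
    return f"edge-result({prompt})"


backends = [Backend("cloud", cloud_infer), Backend("edge", edge_infer)]
used, result = infer_with_failover("hello", backends)
print(used, result)
```

The ordering of `backends` is a design choice. For latency-critical services, listing the edge backend first and keeping the cloud as the fallback would match the article's recommendation more closely.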


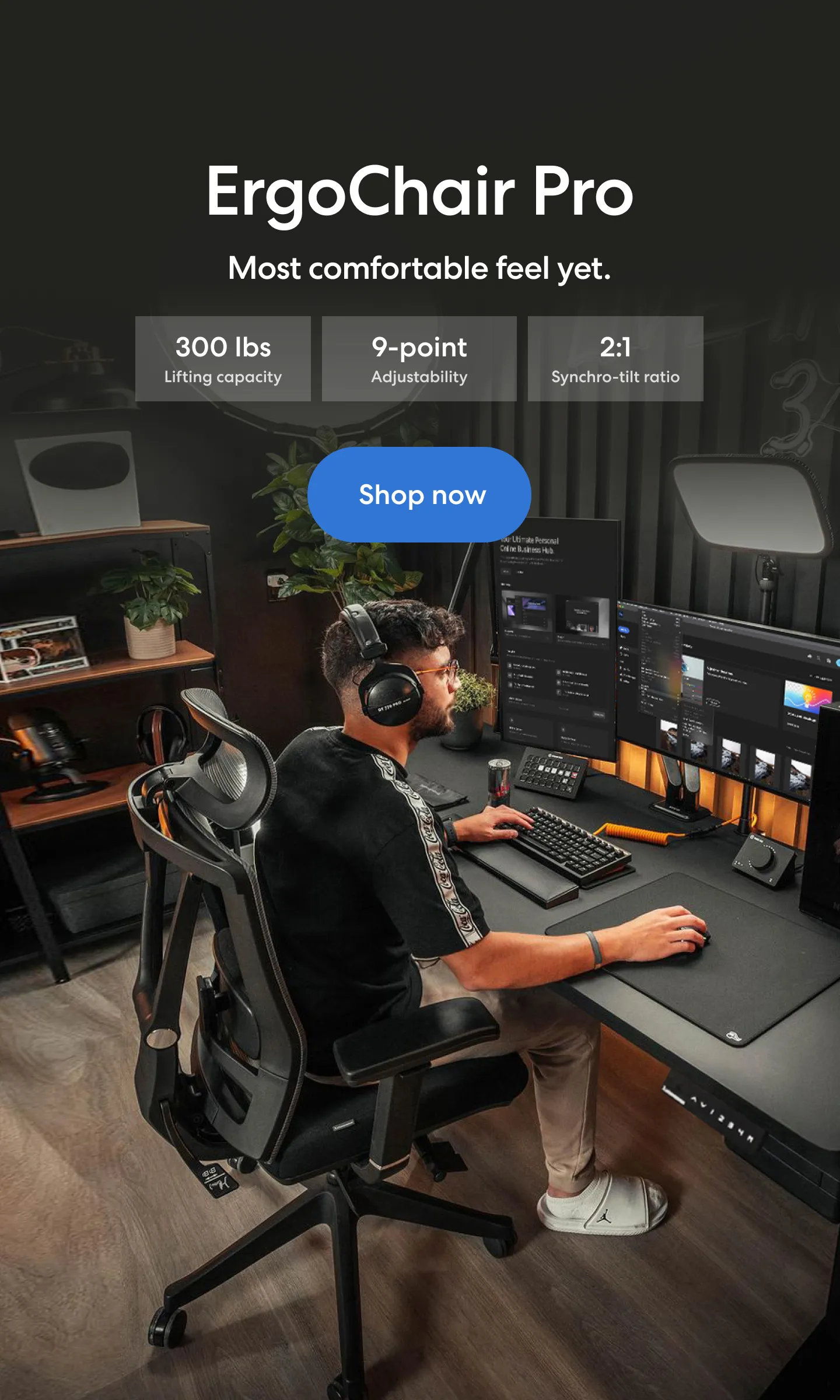

The Hybrid AI Mandate

The path forward for enterprise AI is not a retreat from the cloud, but an intelligent integration of both environments.

Organizations should adopt a Hybrid AI Strategy where:

- Cloud is the primary engine for Model Development, Massive Data Storage, and Training.

- Edge/On-Premise serves as the Execution Layer for Critical Inference.
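As a rough illustration, the split above can be written down as a small placement policy. The workload labels, the `Env` enum, and the `place` function are assumptions made for this sketch. Sending non-critical inference to the cloud is also an assumption, since the strategy above only specifies where critical inference should run.

```python
from enum import Enum


class Env(Enum):
    CLOUD = "cloud"
    EDGE = "edge"


# Hypothetical workload labels mirroring the two bullets above.
CLOUD_WORKLOADS = {"model_development", "data_storage", "training"}


def place(workload: str, critical: bool = False) -> Env:
    """Return the environment a workload should run in under the hybrid split."""
    if workload in CLOUD_WORKLOADS:
        return Env.CLOUD
    if workload == "inference":
        # Assumption: only critical inference is pinned to edge/on-premise hardware.
        return Env.EDGE if critical else Env.CLOUD
    raise ValueError(f"unknown workload: {workload}")


print(place("training"))
print(place("inference", critical=True))
```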

This approach hedges against the "Cloud Paradox." It allows companies to exploit the vast scale and cost efficiencies of the cloud while simultaneously building a robust, geographically distributed layer of resilience to protect their most vital services from regional failures.

The 2025 AWS outage was a painful lesson, but it provides a clear roadmap. To ensure the reliability of the next generation of AI applications, we must distribute power, embrace redundancy, and fully commit to the Hybrid Infrastructure model.
