How to Run AI Locally: Benefits, Challenges, and Solutions


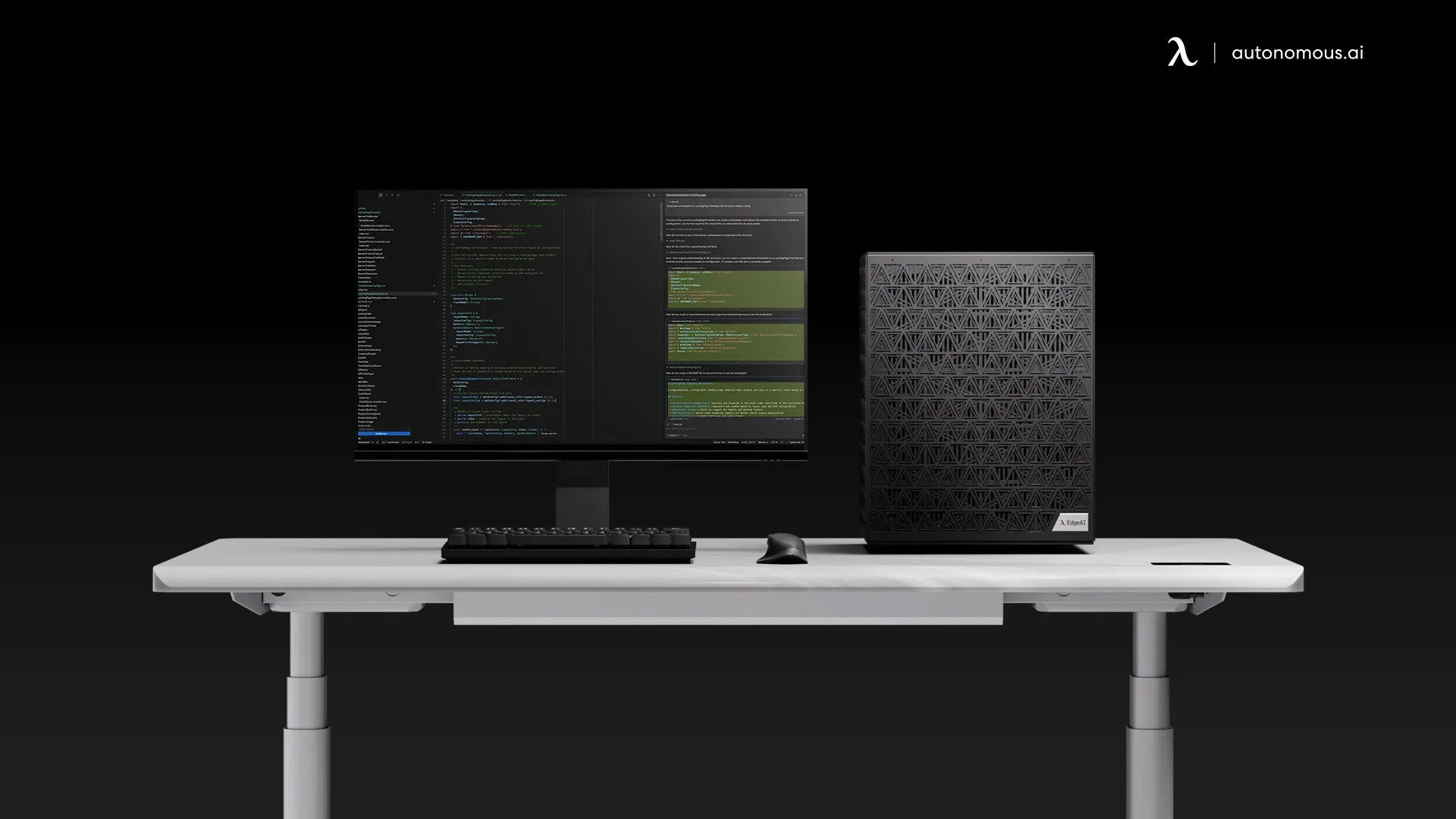

With the rise of artificial intelligence (AI) in multiple industries, businesses are increasingly looking for ways to deploy and run AI models efficiently. Running AI locally, instead of relying solely on cloud infrastructure, has become a game-changer for many organizations seeking faster, more secure, and cost-effective solutions. Local AI computing enables companies to handle large datasets and run complex models without the need for constant internet connectivity or cloud storage.

This article will explore the growing trend of running AI locally, its key benefits, and how you can implement AI locally with solutions like the EdgeAI Computer. Whether you're training machine learning models, performing data analysis, or building predictive systems, running AI locally can unlock significant advantages in terms of performance, privacy, and cost.

What Does "Run AI Locally" Mean?

Running AI locally means executing artificial intelligence models directly on devices or servers within an organization's premises rather than relying on cloud-based systems. This typically involves using high-performance hardware such as GPUs (Graphics Processing Units) and specialized AI chips to process data, train models, and make decisions in real time, without needing an internet connection or external cloud infrastructure.

For businesses, running AI locally offers several benefits. It allows for immediate data processing, reduced latency, and better control over sensitive information, as no data needs to be transferred to the cloud. With the EdgeAI Computer, for instance, organizations can deploy advanced AI systems locally, ensuring security, scalability, and high performance in a single on-premise solution.

Why Running AI Locally Is Beneficial

Running AI locally comes with several advantages that make it particularly appealing for businesses, developers, and organizations working on demanding AI tasks. Let’s break down the key reasons why local AI processing is a game-changer.

- Enhanced Privacy and Security

When you run AI models locally, all data remains within your infrastructure. This ensures that sensitive information, such as personal details or financial records, doesn’t leave your organization, reducing the risk of data breaches or unauthorized access. For industries that handle highly confidential data, such as healthcare or finance, this local processing can help ensure compliance with privacy regulations like GDPR or HIPAA.

- Reduced Latency

In AI-driven applications, real-time data processing is crucial. Running AI locally eliminates the need for data to travel back and forth to the cloud, which can introduce significant delays. This is especially important for applications requiring immediate responses, such as in autonomous vehicles, industrial automation, or even in smart cities where decisions need to be made instantly based on real-time data.
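The latency argument can be made concrete with a rough budget. The sketch below is a simplified model, not a benchmark: it assumes a cloud request pays for uploading the payload plus one network round trip, that inference takes the same time in both places, and that the figures used (a 2 MB frame, a 20 Mbps uplink, a 60 ms round trip, 30 ms inference) are purely hypothetical.

```python
def cloud_latency_ms(payload_bytes, uplink_mbps, round_trip_ms, inference_ms):
    """Estimate end-to-end latency of a cloud call: upload + round trip + inference."""
    upload_ms = (payload_bytes * 8) / (uplink_mbps * 1_000_000) * 1000
    return upload_ms + round_trip_ms + inference_ms


def local_latency_ms(inference_ms):
    """In this simplified model, local inference has no network leg."""
    return inference_ms


# Hypothetical figures chosen only for illustration.
cloud = cloud_latency_ms(payload_bytes=2_000_000, uplink_mbps=20,
                         round_trip_ms=60, inference_ms=30)
local = local_latency_ms(inference_ms=30)
print(f"cloud: {cloud:.0f} ms, local: {local:.0f} ms")
```

With these assumed numbers the upload alone dominates the cloud path, which is why payload size and uplink speed matter as much as raw compute when deciding where to run a model.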

- Cost Savings Over Time

While the initial investment in local AI infrastructure may seem high, running AI locally can save money in the long term. Businesses don’t have to pay ongoing cloud fees for data transfer, storage, or computational power, which can add up quickly with large datasets. Additionally, local AI systems allow for better resource allocation, making them more cost-effective for organizations that require continuous AI processing.
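Whether local hardware pays off depends on how quickly the saved cloud fees cover the upfront cost. The following sketch estimates that break-even point. The function name and all the prices are hypothetical, and the model ignores depreciation, financing, and staff time.

```python
import math


def break_even_months(hardware_cost, local_monthly_cost, cloud_monthly_cost):
    """Return the first whole month in which cumulative local spend is no
    higher than cumulative cloud spend, or None if local never catches up."""
    monthly_saving = cloud_monthly_cost - local_monthly_cost
    if monthly_saving <= 0:
        return None  # local running costs are not lower, so there is no break-even
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical example: 30,000 upfront, 500/month to run locally, 3,000/month in the cloud.
print(break_even_months(30_000, 500, 3_000))
```

If the workload is intermittent and the cloud bill is small, the function returns a long horizon or `None`, which is a useful signal that the cloud may remain the cheaper option.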

- Scalability and Flexibility

Running AI locally provides greater control over the hardware and software used for AI workloads. This flexibility allows businesses to scale their systems up or down based on their specific needs without relying on the limitations or pricing models of cloud providers. Local processing also supports integration with existing infrastructure, making it easier to implement AI solutions across a wide range of industries.

- Customization of AI Models

By running AI locally, businesses have the ability to tailor AI models specifically to their use cases. Instead of using generalized cloud-based AI models, organizations can fine-tune and optimize AI systems to perform better with their unique datasets, resulting in more accurate predictions and insights.


How to Run AI Locally: Key Considerations

Running AI locally can significantly improve your operational efficiency, but there are several important factors to consider before setting up your AI infrastructure. Let’s explore what you need to know to run AI models on local machines effectively.

1. Hardware Requirements

Running AI locally requires powerful hardware capable of processing large datasets and running complex algorithms efficiently. The key hardware components include:

- GPUs:

GPUs are essential for AI workloads because they can run thousands of operations in parallel. High-performance GPUs, such as NVIDIA's RTX 4090 or its data-center GPUs (formerly sold under the Tesla brand), are commonly used for deep learning and AI tasks. These GPUs are often incorporated into advanced AI PCs, enabling faster computation for complex algorithms.

- CPUs:

While GPUs handle most of the heavy lifting, a robust CPU is still essential for loading and preprocessing data, feeding work to the GPU, and managing general system tasks. Understanding the role of GPUs vs CPUs for AI can help optimize system architecture and improve machine learning efficiency.

- Memory (RAM):

Adequate RAM is necessary to support AI training processes, especially when handling large datasets. When a dataset or batch does not fit in memory, the system has to fall back to disk, which slows training considerably.

- Storage:

SSD storage is essential to quickly load and save large datasets, as AI models can require substantial disk space. High-speed storage ensures faster data access and retrieval during training and inference.

Additionally, AI PC Assistants can further enhance the experience by streamlining tasks and improving workflow efficiency.
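A quick way to size GPU memory for the hardware above is to estimate how much space a model's weights take. The sketch below assumes inference only (training also needs room for gradients and optimizer state), uses decimal gigabytes, and reserves a hypothetical 20% of VRAM for activations and caches. The 24 GB figure matches the RTX 4090's memory size, and the 7-billion-parameter model is only an example.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params, dtype="fp16"):
    """Approximate size of the model weights in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_in_vram(num_params, vram_gb, dtype="fp16", usable_fraction=0.8):
    """True if the weights fit in the usable share of GPU memory.

    usable_fraction is an assumed rule of thumb, not a vendor figure.
    """
    return weights_gb(num_params, dtype) <= vram_gb * usable_fraction


params = 7_000_000_000  # hypothetical 7B-parameter model
for dtype in ("fp32", "fp16", "int8"):
    print(dtype, weights_gb(params, dtype), "GB, fits on 24 GB:",
          fits_in_vram(params, 24, dtype))
```

Lower-precision formats shrink the footprint proportionally, which is often what decides whether a model fits on a single card.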

2. Software and Framework Compatibility

Running AI locally requires the proper software stack and libraries that can efficiently interact with your hardware. Popular frameworks, and the NVIDIA libraries they build on, include:

- TensorFlow: One of the most widely used frameworks for deep learning. It supports NVIDIA GPUs through CUDA and cuDNN, making it a great choice for running models locally.

- PyTorch: Another powerful deep learning framework, offering strong GPU acceleration and flexibility for model experimentation.

- CUDA: NVIDIA's parallel computing platform is essential for running AI models efficiently on GPUs. It provides the necessary tools and libraries to accelerate AI workloads.

- cuDNN: A GPU-accelerated library for deep neural networks, which works alongside CUDA to enhance performance in AI applications.

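Each framework release is built against specific CUDA and cuDNN versions, and the GPU driver must support them. Having mutually compatible versions of these libraries installed on your local machines avoids errors such as the framework silently falling back to the CPU, and allows you to fully leverage the power of your hardware.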
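Before training anything, it helps to confirm what the Python environment can actually see. The sketch below is one possible check using PyTorch; the function name `gpu_stack_report` is hypothetical, and the code returns an empty report instead of failing when PyTorch is not installed.

```python
import importlib.util


def gpu_stack_report():
    """Summarize whether PyTorch, CUDA, and cuDNN are visible from this environment."""
    report = {
        "torch_installed": False,
        "cuda_available": False,
        "cuda_version": None,
        "cudnn_version": None,
        "devices": [],
    }
    if importlib.util.find_spec("torch") is None:
        return report  # PyTorch is not installed; nothing more to check

    import torch

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    report["cuda_version"] = torch.version.cuda  # None on CPU-only builds
    if report["cuda_available"]:
        report["cudnn_version"] = torch.backends.cudnn.version()
        report["devices"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return report


print(gpu_stack_report())
```

If `cuda_available` is `False` on a machine that has an NVIDIA GPU, the usual suspects are a CPU-only framework build or a driver that is older than the CUDA version the framework expects.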

3. Data Management and Processing

Data handling is a critical part of running AI locally. Efficient data management involves:

- Data Preprocessing: Before training models, data must be cleaned, transformed, and formatted. Running preprocessing tasks locally ensures faster data pipeline execution and reduces the dependency on external cloud services.

- Data Storage: Consider how you will store and organize your datasets. Local storage or on-premises data management systems can support faster data retrieval compared to cloud-based services.

- Data Security: Local data processing gives you full control over your data, making it easier to implement security measures like encryption and access control. With the right enterprise search tools, you can manage and secure your data while improving search efficiency across your organization.
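As a small illustration of the preprocessing step, the sketch below cleans and scales one numeric column using only Python's standard library. The column name, sample data, and the choice of min-max scaling are assumptions made for the example; a real pipeline would likely use a dedicated data library.

```python
import csv
import io


def clean_and_scale(csv_text, column):
    """Drop rows whose `column` is missing or non-numeric, then min-max scale it to [0, 1]."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            row[column] = float(row[column])
        except (TypeError, ValueError):
            continue  # skip rows that cannot be parsed as a number
        rows.append(row)
    if not rows:
        return rows
    lo = min(r[column] for r in rows)
    hi = max(r[column] for r in rows)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    for r in rows:
        r[column] = (r[column] - lo) / span
    return rows


# Hypothetical sensor readings with one empty and one invalid value.
sample = "id,temperature\n1,20\n2,\n3,30\n4,n/a\n5,25\n"
for row in clean_and_scale(sample, "temperature"):
    print(row)
```

Because everything runs in-process on local files, no raw records need to leave the machine during cleaning.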

4. Cooling and Power Supply

AI models, especially those running on GPUs, require significant power and generate heat. Proper cooling systems are essential to prevent hardware from overheating, especially in continuous, 24/7 AI training environments. Additionally, ensure that your power supply can handle the demands of high-performance AI computing.
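A rough power budget helps when choosing a power supply. The sketch below adds up the rated draw of the GPUs and the rest of the system, then applies a safety margin. The 20% margin is an assumed rule of thumb, the 300 W figure for other components is hypothetical, and 450 W is the rated board power of an RTX 4090.

```python
def recommended_psu_watts(gpu_count, gpu_watts, other_watts, headroom=0.2):
    """Sum the component power draw and add a margin for transient spikes.

    headroom is an assumed safety factor, not a manufacturer requirement.
    """
    load = gpu_count * gpu_watts + other_watts
    return load * (1 + headroom)


# Hypothetical workstation: two 450 W GPUs plus 300 W for CPU, drives, and fans.
print(round(recommended_psu_watts(gpu_count=2, gpu_watts=450, other_watts=300)))
```

Nearly all of that electrical load ends up as heat, so the same number gives a first estimate of what the cooling system has to remove.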

5. Scalability and Expansion

When running AI locally, consider how easy it will be to scale up your operations. Businesses should plan for future expansion by ensuring that their infrastructure supports adding more GPUs or other hardware components as needed. Scalable solutions allow you to grow without facing limitations that could hinder your machine learning and AI progress.


The Challenges of Running AI Locally

Running AI locally has many advantages, but it also comes with its unique set of challenges. These challenges primarily revolve around hardware limitations, data management, and integration with existing systems. Here are the key challenges you may face:

1. Hardware Limitations

AI models, especially deep learning models, require substantial computational power. While GPUs like the NVIDIA RTX 4090D are powerful, the cost of purchasing and maintaining high-end hardware can be prohibitive for smaller businesses or independent developers. Additionally, GPUs have physical and performance limitations that could affect scalability as models grow more complex.

For businesses starting out, it's important to choose a GPU that balances performance and cost for the machine learning tasks at hand. For large-scale data and complex deep learning models, higher-end GPUs with more memory and compute become essential to meet the demands of advanced computations.

2. Data Storage and Management

Running AI locally means managing large datasets on-site, which requires significant storage capacity. Storing and maintaining these datasets can become a challenge, particularly when handling high-resolution data (e.g., images, video) or large volumes of real-time data. Proper infrastructure needs to be in place for fast data access, and security measures must be adopted to prevent data loss.

3. Power Consumption

High-performance GPUs, particularly for deep learning tasks, require a lot of power. Ensuring that the local AI system runs efficiently without spiking operational costs is a challenge. Additionally, the GPU can produce significant heat, so proper cooling systems are necessary to maintain performance and extend the lifespan of the hardware.
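To see how power draw turns into operating cost, the sketch below converts an average load into a monthly electricity bill. The average draw, duty cycle, and price per kWh are hypothetical inputs, and the estimate excludes the power used by cooling.

```python
def monthly_energy_cost(avg_watts, hours_per_day, price_per_kwh, days=30):
    """Estimate the monthly electricity cost of a system at a given average draw."""
    kwh = avg_watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


# Hypothetical: 1,200 W average draw, running around the clock, at 0.15 per kWh.
print(f"{monthly_energy_cost(1200, 24, 0.15):.2f}")
```

Comparing this figure with the equivalent cloud bill shows whether continuous local training is actually cheaper for a given workload.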

4. Maintenance and Upkeep

Running AI locally requires businesses to handle hardware maintenance themselves. This can involve troubleshooting hardware failures, performing software updates, and scaling the system as AI tasks become more demanding. For organizations without an in-house IT team, this can add operational overhead.

Solutions for Running AI Locally

When running AI locally, businesses face several challenges, but these can be effectively addressed with the right hardware solutions. The EdgeAI Computer is specifically designed to tackle these challenges, offering scalability, robust data security, and optimized efficiency for demanding AI workloads.

- Scalability:

As AI workloads grow, so does the need for scalable computing resources. The EdgeAI Computer offers flexible configurations with up to 8 NVIDIA RTX 4090D GPUs, allowing businesses to scale up their AI infrastructure as needed without relying on cloud providers.

- Data Security:

For industries dealing with sensitive data, such as healthcare or finance, data security is critical. The EdgeAI Computer keeps all data processing local, meaning no data leaves your premises, which significantly reduces the risk of data breaches and supports compliance with privacy regulations. This local data processing is a prime example of Edge AI in action, offering enhanced control over data security.

- Efficiency:

The EdgeAI Computer is built for high-performance AI processing, enabling businesses to run complex models and large datasets without the latency and bandwidth issues associated with cloud computing. With over a petaflop of AI performance, businesses can achieve faster training times and real-time decision-making. Many Edge AI applications, such as real-time analytics and autonomous systems, benefit greatly from this type of local processing power.

Comparing Local AI vs. Cloud AI

Choosing between local AI solutions and cloud-based AI depends on several factors, such as budget, performance needs, and privacy concerns. Let’s take a look at the pros and cons of each:

| Feature | Local AI | Cloud AI |
| --- | --- | --- |
| Performance | High performance, particularly with GPUs like NVIDIA RTX 4090D. | Scalable performance but may suffer from latency. |
| Privacy | Data stays local, offering full control and better privacy. | Data may be stored off-site, raising privacy concerns. |
| Cost | Higher upfront costs but no ongoing cloud fees. | Lower initial investment but can be expensive over time due to usage fees. |
| Scalability | Limited by hardware; requires upgrades. | Highly scalable with cloud resources. |
| Maintenance | Requires local IT support and hardware management. | Managed by cloud service providers, reducing IT overhead. |

For businesses needing high-performance AI without the ongoing costs of cloud services, local AI systems like the EdgeAI Computer offer a more affordable, secure, and efficient option. In the Edge AI vs. cloud AI comparison, the main advantages of Edge AI are data privacy and long-term cost efficiency. Cloud AI, on the other hand, is ideal for businesses that require elastic scalability and don't want to worry about hardware management.

FAQs

Can I run AI locally without a cloud connection?

Yes, with the right hardware, such as the EdgeAI Computer, you can run AI models and perform tasks locally without needing to connect to the cloud.

What are the advantages of running AI locally?

Local AI systems offer faster decision-making, better data privacy, and lower long-term costs compared to cloud-based AI systems, making them ideal for real-time applications.

How does local AI compare to cloud AI in terms of performance?

Local AI provides high performance, especially for real-time applications, by using powerful GPUs. Cloud AI, while scalable, can introduce latency due to data transfer, especially for time-sensitive tasks.

Is running AI locally more cost-effective than cloud computing?

Yes, running AI locally can be more cost-effective in the long run, as it eliminates the ongoing fees associated with cloud services. Local systems also avoid bandwidth costs and provide better control over resources.

Conclusion

Running AI locally has clear advantages, including faster decision-making, better privacy, and more control over resources. However, it also comes with challenges related to hardware requirements, data management, and ongoing maintenance. By choosing the right local AI solution, such as the EdgeAI Computer, businesses can effectively manage large AI workloads without relying on cloud infrastructure.

For businesses looking for a cost-effective and high-performance solution for deep learning and other AI tasks, local AI systems offer a compelling alternative to cloud computing. By investing in scalable and efficient local solutions, companies can ensure that they are well-equipped to meet their AI needs for years to come.
