
From Screens to Servos: Why Robots Play an Important Role in the AI World


If you are learning Artificial Intelligence (AI) today, your world likely exists within a browser window. You are probably comfortable with Python notebooks, you’ve experimented with ChatGPT wrappers, and you might even rank well in Kaggle competitions.

That is fantastic progress. But it’s also incomplete.

There is a massive difference between an AI model that can identify a cat in a JPEG file and an AI agent that can navigate a cluttered living room to find a real cat without bumping into the furniture.

The first is "AI in a box" - impressive, but isolated. The second is Embodied AI - intelligence that has a physical body and interacts with the real world. If you want to future-proof your skills as a developer or researcher, it’s time to step away from the screen and give your code a body.

Here is why the future belongs to those who can bridge the gap between digital algorithms and physical reality.

The "Sim-to-Real" Gap: Reality is Messy

In a computer simulation, gravity is constant, lighting is perfect, and sensors never fail. If your simulated robot hits a wall, it’s usually because of a bug in your math.

The real world is not so forgiving. It is chaotic, noisy, and unpredictable.

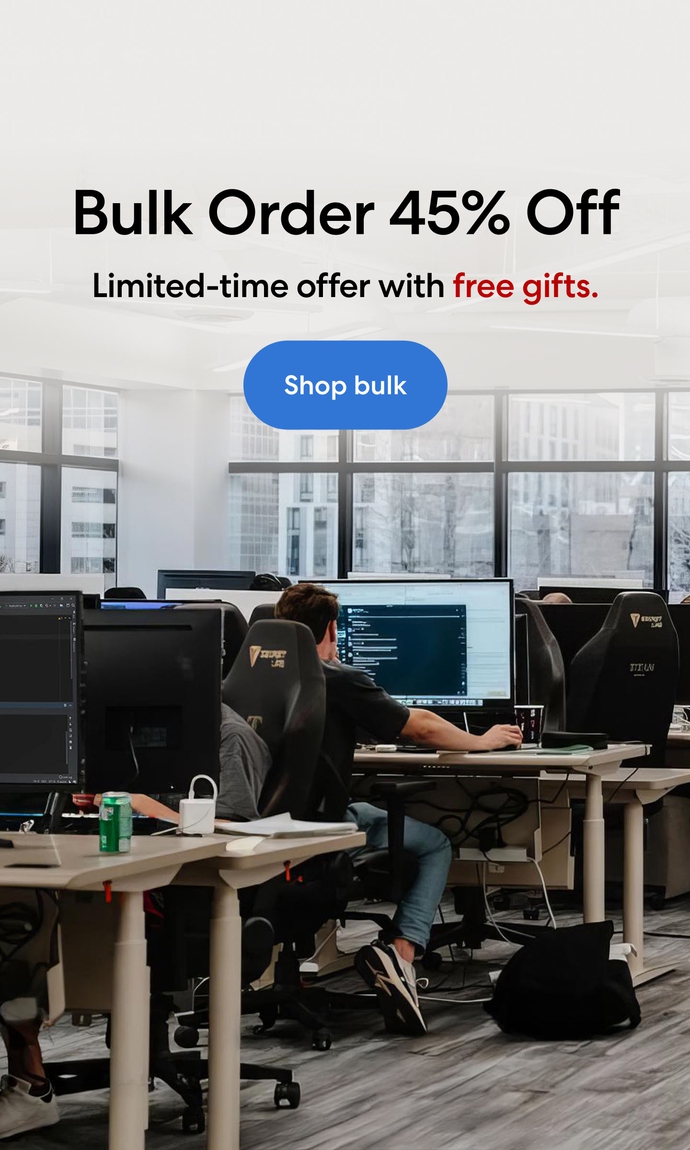

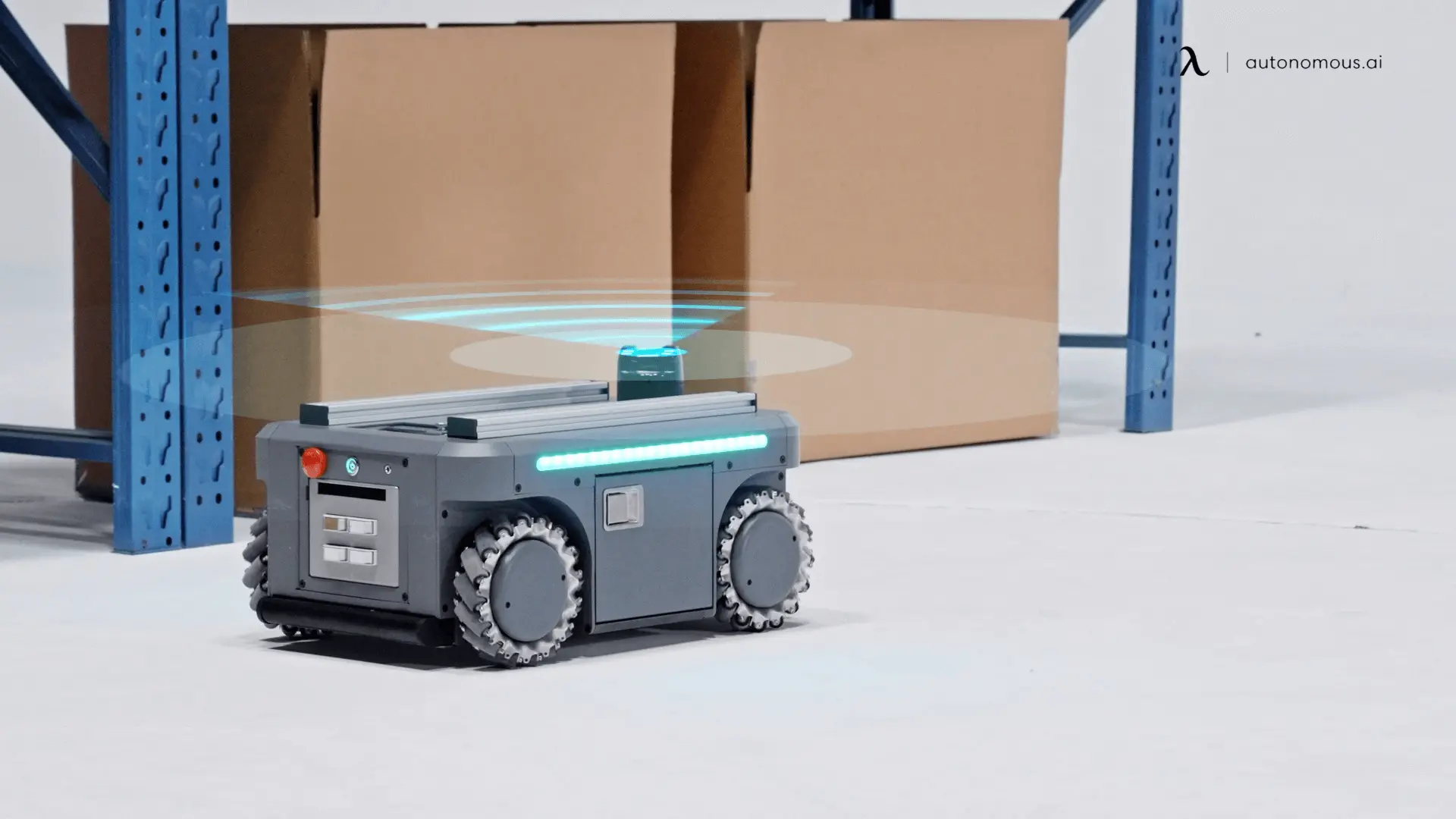

When you deploy code onto a physical robot, like the autonomous mobile platform myAGV or Hacky, you suddenly face challenges that don't exist on a screen:

- Sensor Noise: LiDAR and cameras aren't perfect; they give you "fuzzy" data that your AI must filter.

- Physics & Friction: Wheels slip on different floor types; motors don't always spin at the exact speed you tell them to.

- Dynamic Environments: People walk by, shadows shift, and objects get moved.

Unlike a simulated environment, the real world is chaotic, noisy, and unpredictable, with uneven friction, imperfect lighting, and more.

Dealing with this "noise" is what turns a coder into a robotics engineer. Learning to build robust algorithms that can handle the chaos of reality is a skill that cannot be learned in a pure simulation.
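To make the sensor-noise point concrete, here is a minimal sketch of one common way to tame "fuzzy" range data: a sliding median filter. The readings and the `median_filter` helper are hypothetical and are not taken from any myAGV or Hacky SDK; a real robot would likely combine a filter like this with more sophisticated estimation.

```python
from statistics import median

def median_filter(readings, window=5):
    """Smooth a sequence of range readings with a sliding median."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(readings)):
        # Shrink the window at the ends of the list instead of padding.
        lo = max(0, i - half)
        hi = min(len(readings), i + half + 1)
        smoothed.append(median(readings[lo:hi]))
    return smoothed

# Hypothetical LiDAR distances in metres; 9.90 is a one-off spike.
raw = [1.02, 0.98, 1.01, 9.90, 1.00, 0.99, 1.03]
filtered = median_filter(raw, window=3)
print(filtered)
```

A median is used here rather than a mean because a single wild reading cannot drag the result far from its neighbours, which is usually what you want for occasional sensor glitches.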

Mastering Spatial Intelligence (More Than Just Pixels)

On a screen, computer vision is mostly 2D pattern recognition. In robotics, it becomes a 3D survival skill.

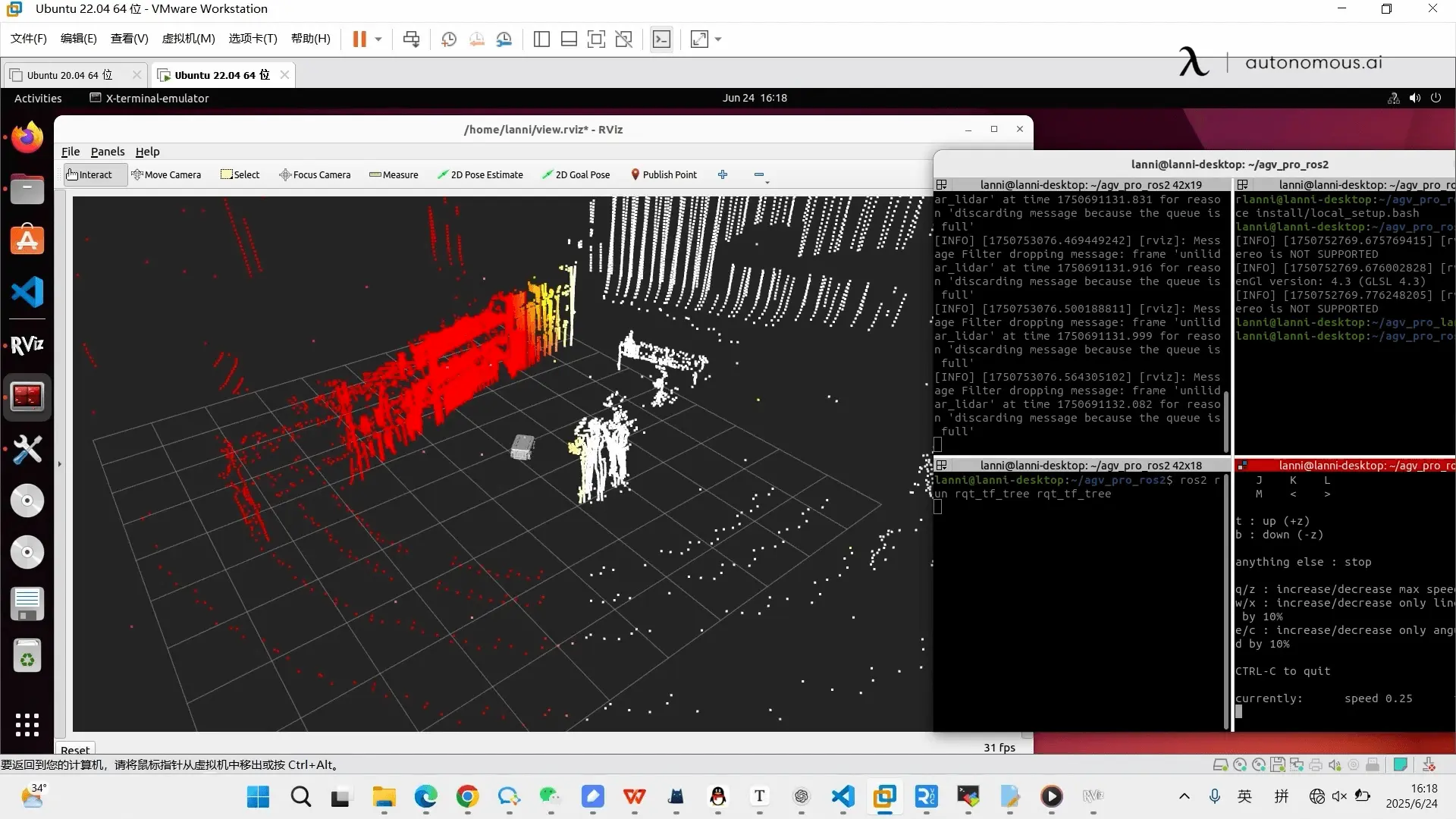

Working with a mobile robot forces you to tackle Spatial Intelligence. You need to learn concepts like SLAM (Simultaneous Localization and Mapping), the complex process where a robot uses sensors like LiDAR to determine its location while simultaneously creating a map of its surroundings.

An AI on a server doesn't need to know "where" it is. An autonomous robot that doesn't know its location is just an expensive paperweight.
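As a rough illustration of the "mapping" half of SLAM, the sketch below marks occupied cells in a small grid from LiDAR-style readings. It assumes the robot's pose is already known, which is exactly the part that full SLAM has to estimate at the same time. The grid size, pose, scan values, and the `mark_hits` helper are all made up for the example.

```python
import math

def mark_hits(grid, pose, scan, cell_size=0.1):
    """Mark grid cells where range beams ended, given a known robot pose.

    pose: (x, y, heading) in metres and radians, in the map frame.
    scan: list of (bearing, distance) pairs relative to the robot.
    """
    x, y, heading = pose
    rows, cols = len(grid), len(grid[0])
    for bearing, distance in scan:
        # Convert the beam endpoint from the robot frame to the map frame.
        angle = heading + bearing
        hit_x = x + distance * math.cos(angle)
        hit_y = y + distance * math.sin(angle)
        col = math.floor(hit_x / cell_size)
        row = math.floor(hit_y / cell_size)
        if 0 <= row < rows and 0 <= col < cols:
            grid[row][col] = 1  # 1 = occupied, 0 = unknown or free
    return grid

grid = [[0] * 20 for _ in range(20)]        # a 2 m x 2 m map, 10 cm cells
pose = (1.05, 1.05, 0.0)                    # hypothetical known pose
scan = [(0.0, 0.5), (math.pi / 2, 0.4)]     # one beam ahead, one to the left
mark_hits(grid, pose, scan)
```

If the pose is wrong by even a few centimetres, the obstacles land in the wrong cells, which is why localization and mapping have to be solved together.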

The "Hacky" Spirit and Edge AI: The Brain on Board

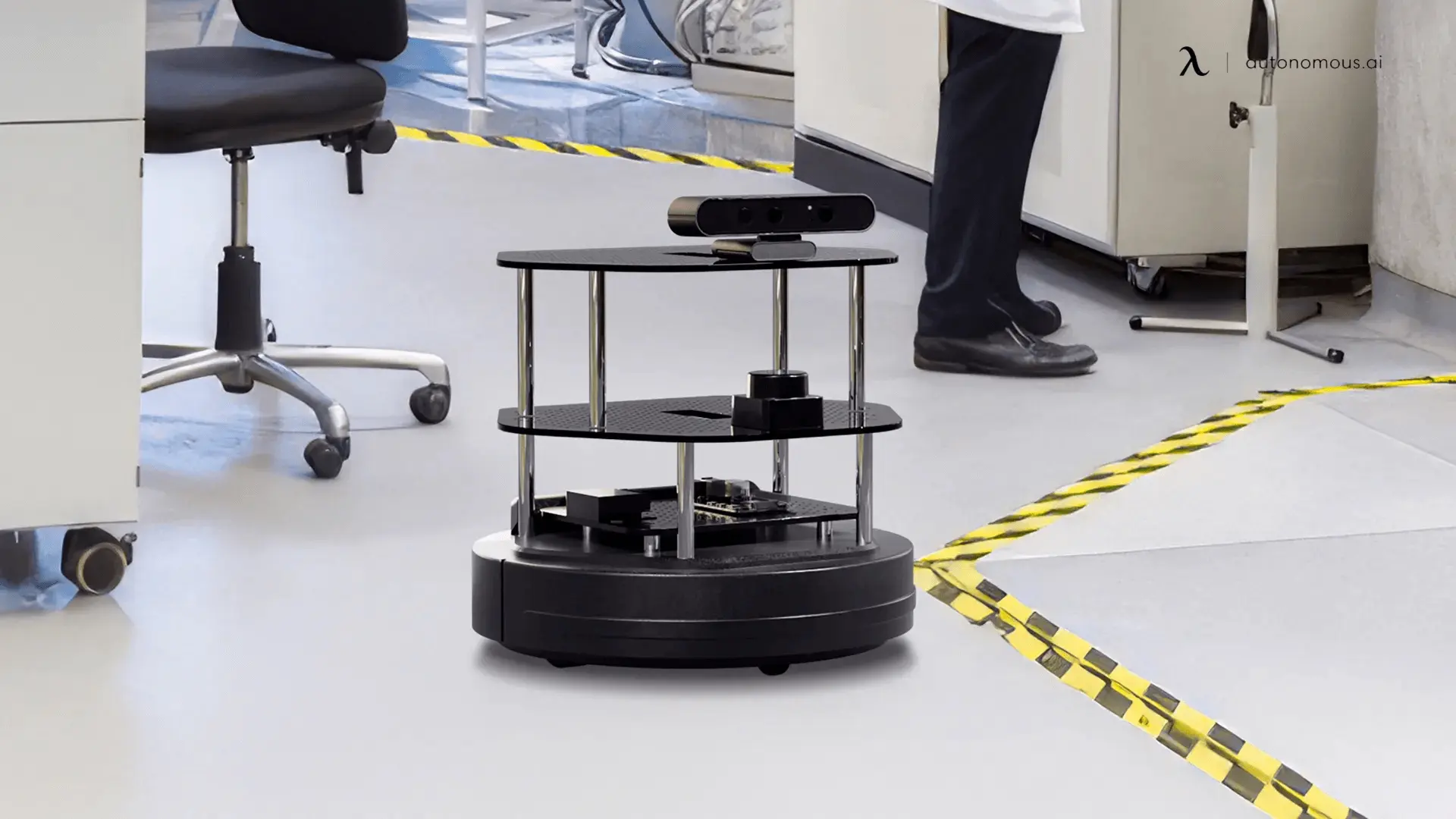

Real-world robotics isn't just about polished, finished products. It’s about the "hacky" spirit of engineering - the ability to tinker, modify, break, and rebuild.

Platforms in the Elephant Robotics ecosystem are designed specifically for this open-source, "hacky" approach. They run on accessible hardware like Raspberry Pi or NVIDIA Jetson Nano, giving you root access to the system. This means you aren't locked into a vendor's walled garden; you can hack the code, attach strange new sensors, and push the hardware to its limits.

This leads directly to one of the most critical skills in modern robotics: Edge AI.

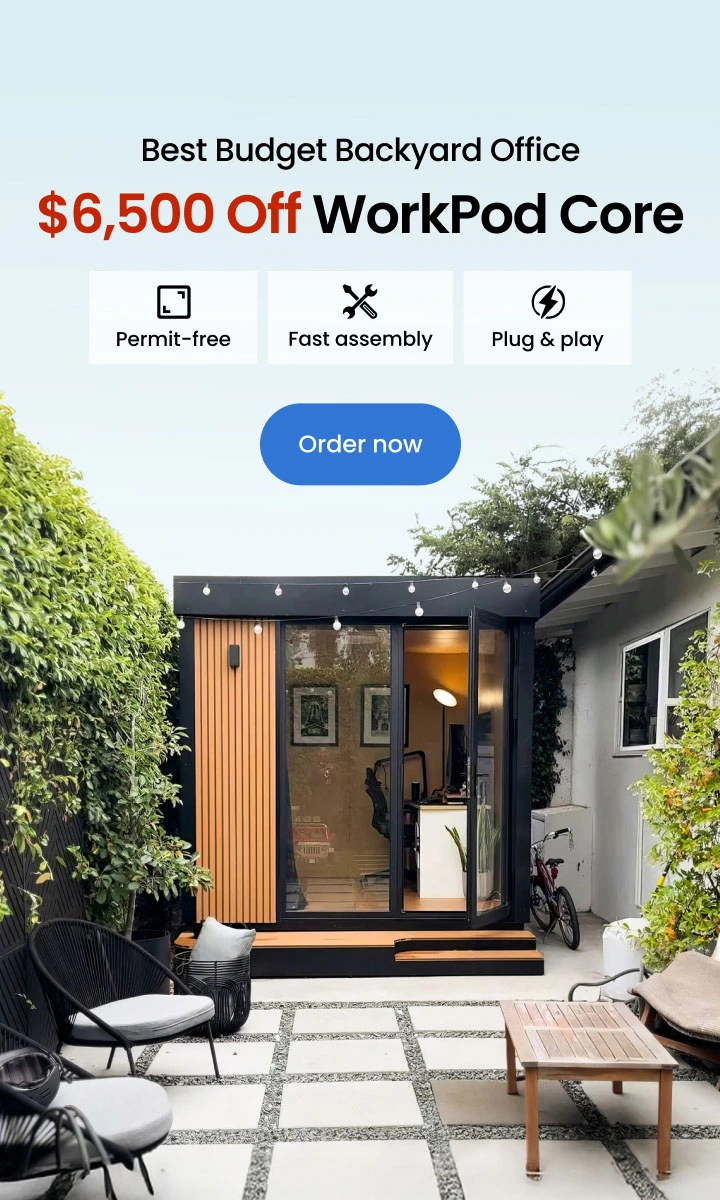

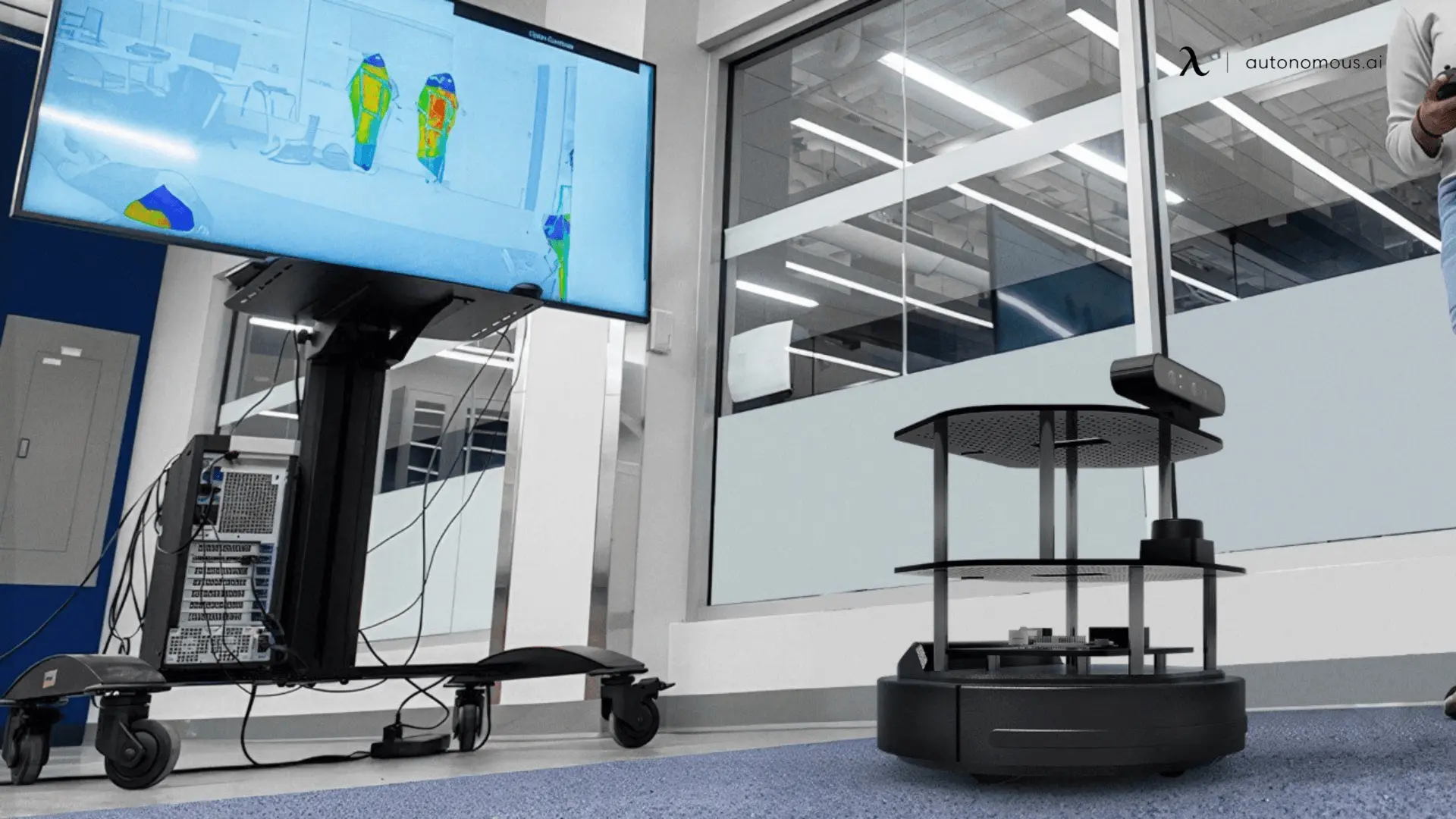

While the robot is the "body," every body needs a powerful "brain." Traditionally, developers relied on the cloud, but the future is moving toward Local Intelligence. A robot in a warehouse or a field cannot always wait for a slow Wi-Fi connection to send data to the cloud for processing. It needs to make split-second decisions right there, on the "edge."

Edge AI can help your bots make split-second decisions and act in real time, without the latency of the cloud.

By working with these physical platforms, you learn how to optimize heavy deep-learning models so they can run efficiently on limited, onboard hardware. Autonomous’ Edge AI brings data-center muscle to your desk with 192GB of GPU RAM, offering 96% savings over traditional cloud providers. It operates 100% on-premises for total privacy, letting you run "hacky" experiments or professional models 24/7 without an internet connection or a rising bill.

By connecting your robots to a local Edge AI hub, you create a private ecosystem where your AI can learn and act in real-time without the latency of the cloud.
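One widely used way to shrink a model for limited onboard hardware is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts weight memory to roughly a quarter. The sketch below shows the basic idea in plain Python with made-up weights. It is a simplified illustration of symmetric int8 quantization, not the workflow of any particular framework or of the platforms mentioned above.

```python
def quantize_int8(weights):
    """Map float weights to int8-range integers plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]

weights = [0.50, -1.27, 0.03, 0.90]          # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, worst_error)
```

The trade-off is a small rounding error per weight (at most half a quantization step) in exchange for a smaller, faster model; whether that loss is acceptable depends on the task.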

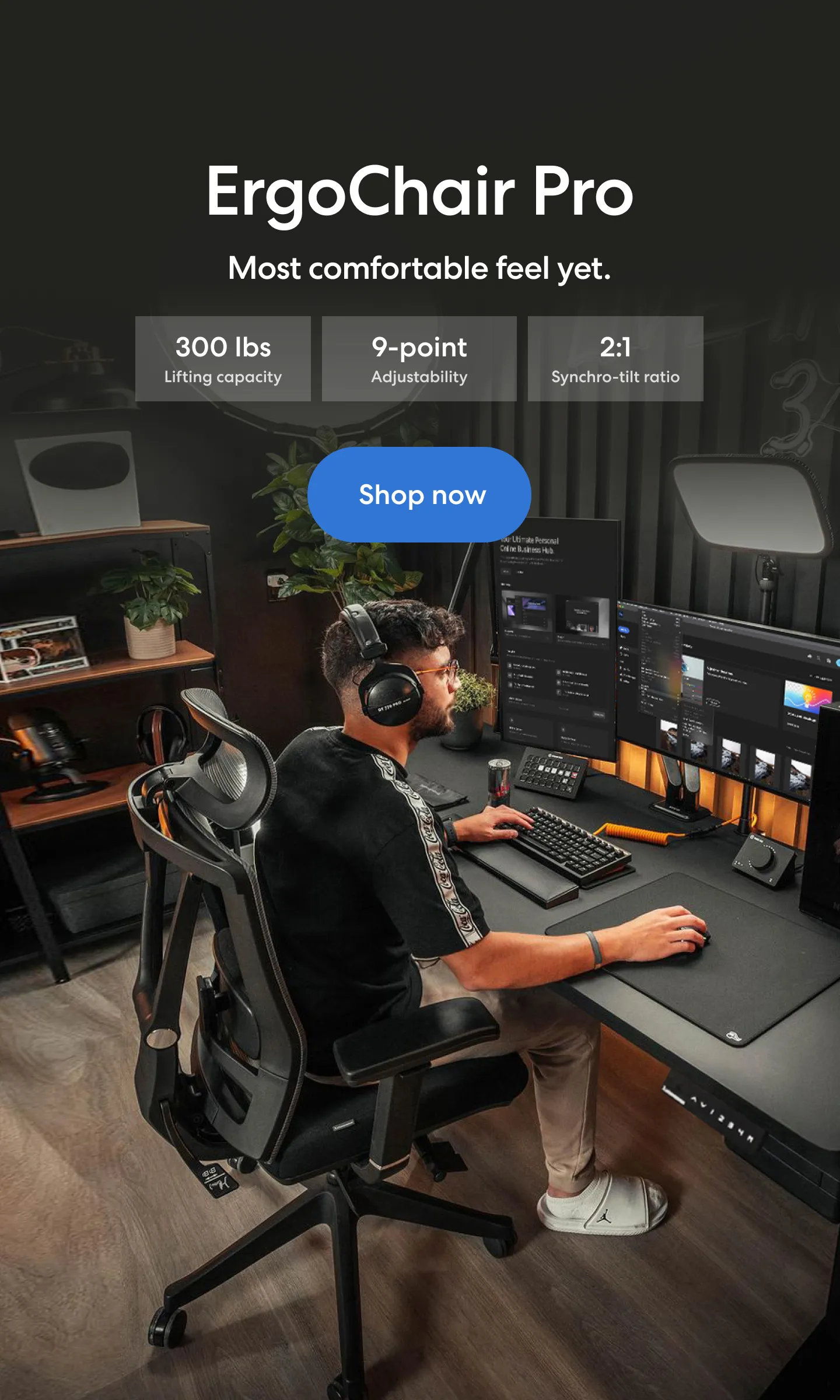

The Power of Manipulation: Giving Your AI Hands

Finally, true Embodied AI isn't just about looking and moving; it's about changing the environment.

Adding a robotic arm, like the 6-axis myCobot, makes the problem far more complex. Now your AI needs hand-eye coordination. It must use a camera (with a vision library such as OpenCV) to recognize an object, solve the "inverse kinematics" needed to move the arm joints to that location, and determine the correct torque to pick it up without crushing it.
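To give a feel for the inverse kinematics step, here is a minimal sketch for a much simpler arm than a 6-axis one: a planar arm with two links. Given a target point, it returns the two joint angles, then checks the answer with forward kinematics. The link lengths and target are hypothetical, and a real 6-axis arm would typically rely on a numerical solver or the vendor's library rather than this closed-form shortcut.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that reach (x, y)."""
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)  # one of the two mirror-image solutions
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow

def forward(shoulder, elbow, l1, l2):
    """Forward kinematics: where the arm tip ends up for given angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y

# Hypothetical arm with two 15 cm links reaching for a point 20 cm out.
shoulder, elbow = two_link_ik(0.20, 0.10, l1=0.15, l2=0.15)
reached = forward(shoulder, elbow, 0.15, 0.15)
print(reached)
```

Even this toy case has two valid answers (elbow bent one way or the other) and targets that cannot be reached at all, which hints at why the full 6-axis problem is hard.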

Combining mobility (myAGV or Hacky) with manipulation (myCobot) creates a "Mobile Manipulator." This is the holy grail of flexible automation, and programming one is perhaps the ultimate final exam for any AI student.

Bringing your code from simulation into real life is the ultimate final exam for any AI student.

“Open-source AI tools and robotics do sound intriguing,” said Jim Burnham, STEAM Educator at Silicon Valley CTE High School. “I am trying to do more AI, machine learning, and machine vision. I want to build out some local, in-class AI processing capability to launch an AI high school class. It will be about students using AI, prompt engineering, and then lots of playing and training AI models.”

Don't Get Left Behind in the Digital World

The AI revolution is rapidly moving from our screens into our streets, warehouses, hospitals, and homes. The industry doesn't just need more people who can fine-tune an LLM; it desperately needs engineers who understand how software interacts with hardware in the physical world.

Don't let your skills remain trapped in a simulation. It's time to get your hands dirty, embrace the "hacky" side of engineering, and explore the potential of Edge AI.

Ready to give your code a body? Explore the open-source, modular robotics ecosystem at Autonomous.ai and start building for the real world today.
